Ridgeback Tutorials

Ridgeback Overview

Introduction

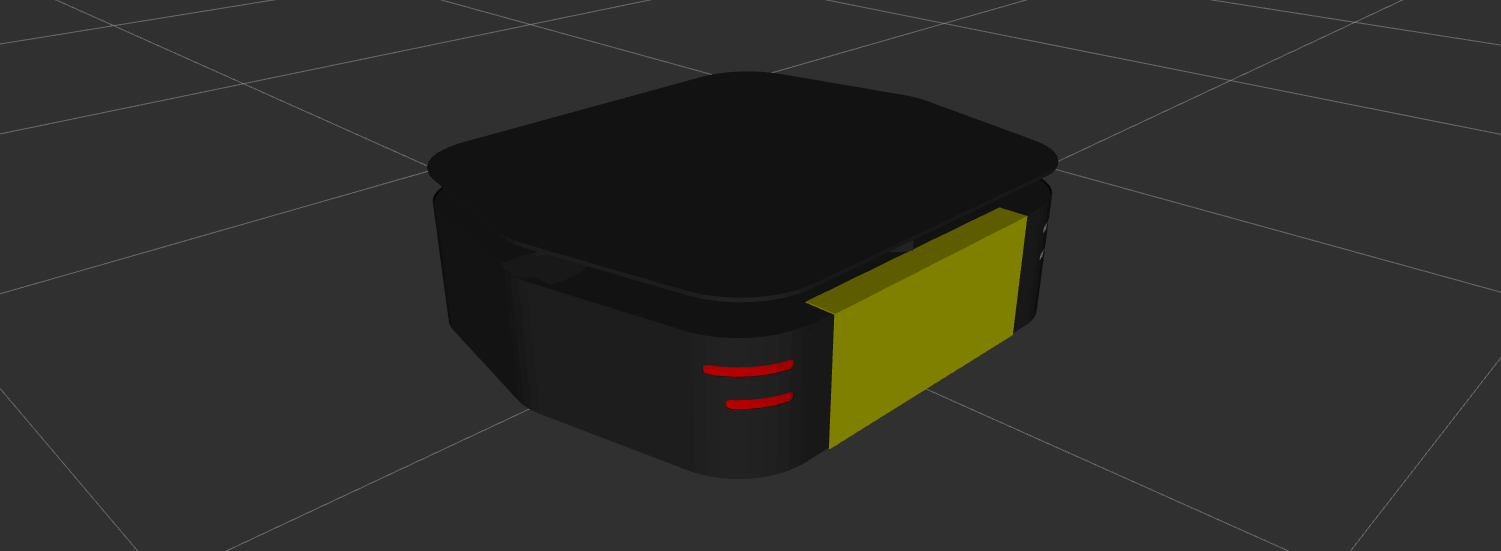

Ridgeback is a rugged, indoor omnidirectional platform designed to move manipulators and other heavy payloads with ease and precision. These tutorials will assist you with setting up and operating your Ridgeback. The tutorial topics are summarized below in the suggested reading order.

For more information or to receive a quote, please visit us online.

These tutorials assume that you are comfortable working with ROS. We recommend starting with our ROS tutorial if you are not familiar with ROS already.

These tutorials specifically target Ridgeback robots running Ubuntu 20.04 with ROS Noetic, as it is the standard OS environment for Ridgeback. If instead you have an older Ridgeback robot running Ubuntu 18.04 with ROS Melodic, please follow this tutorial to upgrade the robot OS environment to Ubuntu 20.04 with ROS Noetic.

Ridgeback ROS Packages provides references for the software packages and key ROS topics.

Ridgeback Software Setup outlines the steps for setting up the software on your Ridgeback robot and optionally on a remote computer.

Using Ridgeback describes how to simulate and drive your Ridgeback. Simulation is a great way for most users to learn more about their Ridgeback; understanding how to effectively operate Ridgeback in simulation is valuable whether you are in the testing phase with software you intend to ultimately deploy on a physical Ridgeback or you do not have one and are simply exploring the platform's capabilities. Driving Ridgeback covers how to teleoperate Ridgeback using the remote control, as well as safety procedures for operating the physical robot. Anyone working with a physical robot should be familiar with this section.

Navigating Ridgeback builds on what is learned in the Simulation tutorial, since navigation and map-making can be run in the simulated environment. The content applies to both the simulator and the real platform, provided your Ridgeback is equipped with a laser scanner.

Ridgeback Tests outlines how to validate that your physical Ridgeback is working correctly.

Advanced Topics covers items that are only required in atypical situations.

Ridgeback ROS Packages

Ridgeback fully supports ROS; all of the packages are available on the Ridgeback GitHub.

Description Package

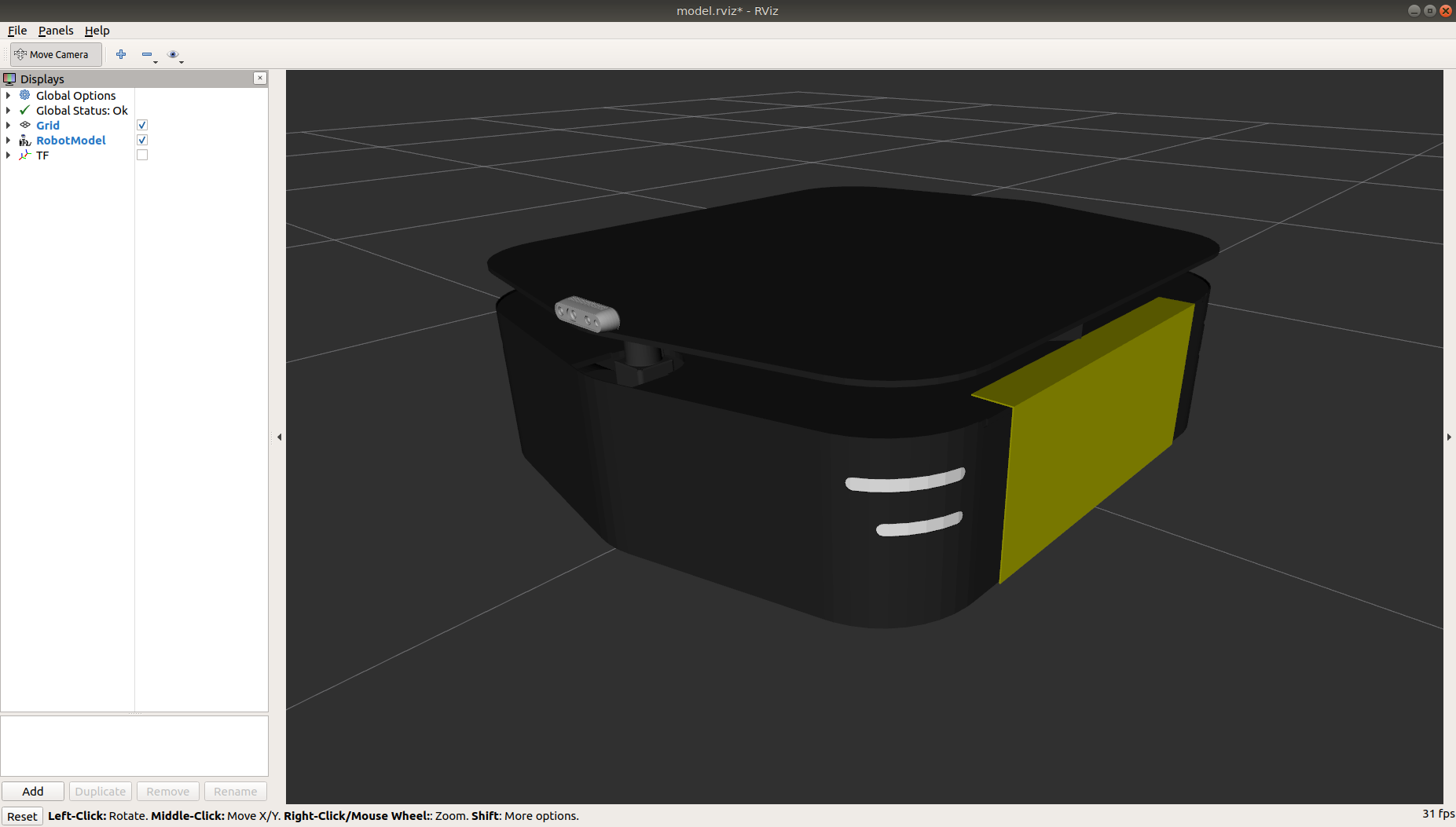

The ridgeback_description repository provides a URDF model of Ridgeback.

Ridgeback's URDF model can be visualized in RViz. Once you have installed the desktop software in an upcoming tutorial, you will be able to run:

roslaunch ridgeback_viz view_model.launch

Environment Variables

Ridgeback can be customized and extended through the use of several environment variables. The details are in the ridgeback_description repository. Some of the most important ones are listed below.

| Variable | Default | Description |
|---|---|---|
| RIDGEBACK_FRONT_HOKUYO_LASER | 0 | Set to 1 if the robot is equipped with a front-facing Hokuyo LIDAR unit (e.g. UST10) |
| RIDGEBACK_REAR_HOKUYO_LASER | 0 | Set to 1 if the robot is equipped with a rear-facing Hokuyo LIDAR unit (e.g. UST10) |
| RIDGEBACK_FRONT_SICK_LASER | 0 | Set to 1 if the robot is equipped with a front-facing SICK LMS-111 LIDAR unit |
| RIDGEBACK_REAR_SICK_LASER | 0 | Set to 1 if the robot is equipped with a rear-facing SICK LMS-111 LIDAR unit |
| RIDGEBACK_MICROSTRAIN_IMU | 0 | Set to 1 if the robot is equipped with a MicroStrain IMU |
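As an illustration, assuming a Ridgeback fitted with front and rear SICK LMS-111 lasers (an example configuration only), the corresponding lines in the robot-wide setup file /etc/ros/setup.bash might look like this:

```shell
# Example accessory settings for /etc/ros/setup.bash.
# Assumes a Ridgeback with front and rear SICK LMS-111 lasers;
# set only the variables that match your actual hardware.
export RIDGEBACK_FRONT_SICK_LASER=1
export RIDGEBACK_REAR_SICK_LASER=1
```

After editing the file, source it again (source /etc/ros/setup.bash) or open a new terminal so the variables are visible. On a physical robot you will likely also need to restart the service (sudo systemctl restart ros) before the robot description picks up the change.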

Configurations

As an alternative to individually specifying each accessory, some fixed configurations are provided in the package.

These can be specified using the config arg to description.launch, and are intended especially as a convenience for simulation launch.

| Config | Description |
|---|---|
| base | Base Ridgeback |
| base_sick | Ridgeback with front SICK laser |
| dual_hokuyo_lasers | Ridgeback with front and rear Hokuyo lasers |
| dual_sick_lasers | Ridgeback with front and rear SICK lasers |

Key ROS Topics

You can view all topics that are active using rostopic list.

The most important topics are summarized in the table below.

| Topic | Message Type | Purpose |
|---|---|---|
| /cmd_vel | geometry_msgs/Twist | Input to Ridgeback's kinematic controller. Publish here to make Ridgeback go. |
| /odometry/filtered | nav_msgs/Odometry | Published by robot_localization; a filtered localization estimate based on wheel odometry (encoders) and integrated IMU. |
| /imu/data | sensor_msgs/Imu | Published by imu_filter_madgwick; an orientation estimate based on Ridgeback's internal IMU. |
| /mcu/status | ridgeback_msgs/Status | Low-frequency status data for Ridgeback's systems. This information is republished in human-readable form on the /diagnostics topic and is best consumed with the Robot Monitor. |
| /mcu/cmd_fans | ridgeback_msgs/Fans | Publish to this topic to control the installed fans. |
| /cmd_lights | ridgeback_msgs/Lights | Publish to this topic to override the default behavior of Ridgeback's body lights. |

Ridgeback Software Setup

Backing Up Robot Configuration

Upgrading your Clearpath Ridgeback to ROS Noetic from older ROS distributions is a straightforward process; however, it is important to understand that each Ridgeback is different, having undergone customization to your specifications. For more complete upgrade instructions see this guide.

Please take the time to understand what these modifications are, and how to recreate them on your fresh install of Ubuntu Focal/ROS Noetic.

Performing a Backup

As a fail-safe, please make an image of your robot's hard drive. You should always be able to restore this image if you need to revert to your previous configuration.

- The easiest approach may be to either connect a removable (USB or similar) hard drive to the robot's computer, or to unplug the robot's hard drive and insert it into a computer or workstation.

- You can then use a tool such as Clonezilla or dd to write a backup image of your robot's hard drive onto another hard drive.

- Alternatively, you can simply replace the robot computer's hard drive, setting the original drive aside and installing a new one to use with Noetic.

There are several places in the filesystem you should specifically look for customizations for your robot:

| Location | Description |
|---|---|
| /etc/network/interfaces or /etc/netplan/* | Your robot may have a custom network configuration in these files. |
| /etc/ros/*/*-core.d/*.launch | Will contain base.launch and description.launch; may contain custom launch files for your robot configuration. Replaced by ros.d in newer versions. |
| /etc/ros/*/ros.d/*.launch | Will contain base.launch and description.launch; may contain custom launch files for your robot configuration. Replaces *-core.d in newer versions. |
| /etc/ros/setup.bash | May contain environment variables for your configuration. |

Please save all the files listed above and use them as a reference during Noetic configuration.
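If you prefer to script this step, the following is a minimal sketch (a hypothetical helper, not a Clearpath tool) that copies the locations from the table above into a backup directory while preserving their full paths. It assumes GNU cp, as shipped with Ubuntu, and takes the backup directory as an optional first argument.

```shell
#!/usr/bin/env bash
# Hypothetical backup helper: copy the customization files listed above into
# a backup directory, preserving full paths (e.g. BACKUP_DIR/etc/ros/setup.bash).
# Run it with sudo so that root-only files (e.g. under /etc/netplan) can be read.
BACKUP_DIR="${1:-./robot-config-backup}"
mkdir -p "$BACKUP_DIR"

for path in /etc/network/interfaces /etc/netplan /etc/ros; do
  if [ -e "$path" ]; then
    # -a keeps permissions and timestamps; --parents recreates the /etc/... tree
    cp -a --parents "$path" "$BACKUP_DIR"
    echo "Saved $path"
  else
    echo "Skipped $path (not present on this robot)"
  fi
done
```

For example, saved as backup-config.sh (a hypothetical name), it could be run as sudo bash backup-config.sh /media/usb/robot-config-backup, after which the directory can be copied off the robot.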

Installing and Configuring Robot Software

Installing Ridgeback Software

The physical Ridgeback robot comes pre-configured with ROS and the necessary Ridgeback packages already installed; therefore, you will only need to follow the instructions below if you are re-installing software on the Ridgeback.

There are three methods to install software on the physical robot.

The preferred method is using the Clearpath Robotics ISO image, which is covered in this section.

The second method is using Debian (.deb) packages, which is also covered in this section.

The final approach is installing from source by directly cloning Clearpath Robotics GitHub repositories and building them in your ROS (catkin) workspace; however, this method is not covered in this section.

Install from ISO Image

Installing with the Clearpath Robotics ISO image will completely wipe data on the robot's computer, since the ISO image will install Ubuntu 20.04 (Focal), ROS Noetic, and robot-specific packages.

The Clearpath Robotics ISO image only targets Intel-family computers (amd64 architecture).

If your robot runs on an NVIDIA Jetson computer, see Jetson Software for software installation details.

Clearpath provides a lightly customized installation image of Ubuntu 20.04 "Focal" that automatically pulls in all necessary dependencies for the robot software. To install the software on a physical robot through the Clearpath Robotics ISO image, you will first need a USB drive of at least 2 GB to create the installation media, an ethernet cable, a monitor, and a keyboard.

Download the appropriate Noetic ISO image for your platform.

Copy the image to a USB drive using unetbootin, rufus, balena etcher, or a similar program. For example:

sudo unetbootin isofile="clearpath-universal-noetic-amd64-0.4.17.iso"

Connect your robot computer to internet access (via wired Ethernet), a keyboard, and a monitor. Make sure that the robot is connected to shore power (where applicable) or that the robot's battery is fully charged.

Caution: The next step wipes your robot's hard drive, so make sure you have that image backed up.

Boot your robot computer from the USB drive and let the installer work its magic. If asked for a partitioning method, choose Guided - use entire disk and set up LVM.

Note: You may need to configure the computer's BIOS to prioritize booting from the USB drive. On most common motherboards, pressing Delete during the initial startup will open the BIOS for configuration.

The setup process will be automated and may take a long time depending on the speed of your internet connection.


Once the setup process is complete, the computer will turn off. Please unplug the USB drive and turn the computer back on.

On first boot, the username will be administrator and the password will be clearpath. You should use the passwd utility to change the administrator account password.

To set up a factory-standard robot, ensure all your peripherals are plugged in, and run the following commands. They configure a ros service (using robot_upstart) that will bring up the base robot launch files on boot. The script will also detect any standard peripherals (IMU, GPS, etc.) you have installed and add them to the service.

rosrun ridgeback_bringup install

sudo systemctl daemon-reload

On other Clearpath platforms, substitute the platform's bringup package for ridgeback_bringup: husky_bringup, jackal_bringup, dingo_bringup, warthog_bringup, or boxer_bringup.

Finally, start ROS for the first time. In a terminal, run:

sudo systemctl start ros

Installing from Debian Packages

If you are installing software on a physical robot through Debian packages, you will first need to ensure that the robot's computer is running Ubuntu 20.04 (Focal) and ROS Noetic.


Before you can install the robot packages, you need to configure Ubuntu's APT package manager to add Clearpath's package server.

Install the authentication key for the packages.clearpathrobotics.com repository. In a terminal, run:

wget https://packages.clearpathrobotics.com/public.key -O - | sudo apt-key add -

Add the Debian sources for the repository. In a terminal, run:

sudo sh -c 'echo "deb https://packages.clearpathrobotics.com/stable/ubuntu $(lsb_release -cs) main" > /etc/apt/sources.list.d/clearpath-latest.list'

Update your computer's package cache. In a terminal, run:

sudo apt-get update


After the robot's computer is configured to use Clearpath's debian package repository, you can install the robot-specific packages.

On a physical robot, you should only need the robot packages. In a terminal, run:

sudo apt-get install ros-noetic-ridgeback-robot

On other Clearpath platforms, install the corresponding package instead: ros-noetic-husky-robot, ros-noetic-jackal-robot, ros-noetic-dingo-robot, ros-noetic-warthog-robot, or ros-noetic-boxer-robot.

Install the robot_upstart job and configure the bringup service so that ROS will launch each time the robot starts. In a terminal, run:

rosrun ridgeback_bringup install

sudo systemctl daemon-reload

On other Clearpath platforms, substitute the platform's bringup package for ridgeback_bringup: husky_bringup, jackal_bringup, dingo_bringup, warthog_bringup, or boxer_bringup.

Finally, start ROS for the first time. In a terminal, run:

sudo systemctl start ros


Testing Base Configuration

You can check that the service has started correctly by checking the logs:

sudo journalctl -u ros

Your Ridgeback should now be accepting commands from your joystick (see next section). The service will automatically start each time you boot your Ridgeback's computer.

Pairing the Controller

PS4 Controller

To pair a PS4 controller to your robot:

Ensure your controller's battery is charged.

SSH into the robot. The remaining instructions below assume you are already SSH'd into the robot.

Make sure the ds4drv driver is installed, and the ds4drv daemon service is active and running:

sudo systemctl status ds4drv

If ds4drv is not installed, install it by running:

sudo apt-get install python-ds4drv

Put the controller into pairing mode by pressing and holding the SHARE and PS buttons until the controller's LED light bar flashes rapidly in white.

Run the controller pairing script:

sudo ds4drv-pair

In the output of the ds4drv-pair script, you should see that the controller pairs automatically. The controller's LED light bar should also turn solid blue to indicate successful pairing.

Alternatively, if ds4drv-pair fails to detect the controller, you can pair the controller using bluetoothctl:

Install the bluez package if it is not installed already by running:

sudo apt-get install bluez

Run the bluetoothctl command:

sudo bluetoothctl

Enter the following commands in bluetoothctl to scan and display the MAC addresses of nearby devices:

agent on

scan on

Determine which MAC address corresponds to the controller and copy it. Then run the following commands in bluetoothctl to pair the controller:

scan off

pair <MAC Address>

trust <MAC Address>

connect <MAC Address>

The controller should now be correctly paired.

Setting up Ridgeback's Network Configuration

Ridgeback is normally equipped with a combination Wi-Fi + Bluetooth module. If this is your first unboxing, ensure that Ridgeback's wireless antennae are firmly screwed on to the chassis.

First Connection

By default, Ridgeback's Wi-Fi is in client mode, looking for the wireless network at the Clearpath factory.

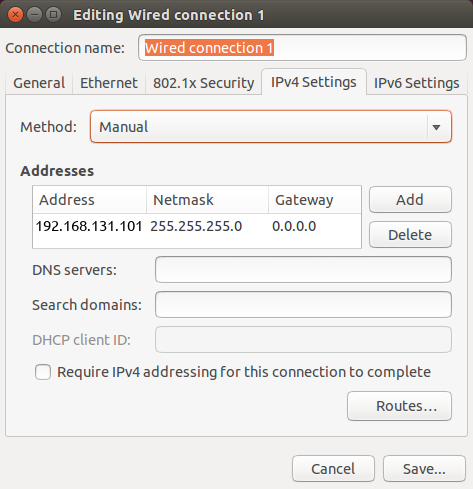

Set your laptop's ethernet port to a static IP such as 192.168.131.101. To do this in Ubuntu, follow the steps below:

- Click on the Wi-Fi icon in the upper-right corner of your screen, and select Edit Connections.

- In the Network Connections window, under Ethernet, select your wired connection and then click Edit.

- Select the IPv4 Settings tab and then change the Method to Manual.

- Click the Add button to add a new address.

- Enter 192.168.131.101 as the static IP under the Address column, enter 255.255.255.0 under the Netmask column, and then select Save.

The next step is to connect to your robot via SSH. To do so, execute the following in a terminal window:

ssh administrator@192.168.131.1

You will be prompted to enter a password. The default password is clearpath, and you should set a new password on first connection.

Changing the Default Password

All Clearpath robots ship from the factory with their login password set to clearpath. Upon receipt of your robot, we recommend changing the password.

To change the password to log into your robot, run the following command:

passwd

This will prompt you to enter the current password, followed by the new password twice. While typing the passwords in the passwd prompt, there will be no visual feedback (e.g. "*" characters).

To further restrict access to your robot you can reconfigure the robot's SSH service to disallow logging in with a password and require SSH certificates to log in. This tutorial covers how to configure SSH to disable password-based login.

Wi-Fi Setup

Now that you are connected via SSH over a wired connection using the steps above, you can set up your robot's computer (running Ubuntu 20.04) to connect to a local Wi-Fi network. (For legacy systems running Ubuntu 18.04, use wicd-curses instead.)

Clearpath robots running Ubuntu 20.04 and later use netplan for configuration of their wired and wireless interfaces.

To connect your robot to your wireless network using netplan, create the file /etc/netplan/60-wireless.yaml and fill in the following:

network:
  wifis:
    # Replace WIRELESS_INTERFACE with the name of the wireless network device, e.g. wlan0 or wlp3s0
    # Fill in the SSID and PASSWORD fields as appropriate. The password may be included as plain-text
    # or as a password hash. To generate the hashed password, run
    # echo -n 'WIFI_PASSWORD' | iconv -t UTF-16LE | openssl md4 -binary | xxd -p
    # If you have multiple wireless cards you may include a block for each device.
    # For more options, see https://netplan.io/reference/
    WIRELESS_INTERFACE:
      optional: true
      access-points:
        SSID_GOES_HERE:
          password: PASSWORD_GOES_HERE
      dhcp4: true
      dhcp4-overrides:
        send-hostname: true

Modify the following variables in the file:

- Replace WIRELESS_INTERFACE with the name of the robot's Wi-Fi interface (e.g. wlan0, wlp2s0, or wlp3s0).

- Replace SSID_GOES_HERE with the name of the local Wi-Fi network.

- Replace PASSWORD_GOES_HERE with the password of the local Wi-Fi network.

If you are editing the file in nano, save it once you are done by pressing CTRL + O, then ENTER, and close it by pressing CTRL + X.

Then, run the following to bring up the Wi-Fi connection:

sudo netplan apply

You can validate that the connection was successful and determine the IP address of the Wi-Fi interface by running:

ip a

A list of network connections will be displayed within the terminal. Locate the wireless network and make note of its IP address.
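If you would rather not scan the full listing by eye, the address can be pulled out of the one-line-per-address output of ip -o -4 addr with a small filter. This is a minimal sketch; the function name ipv4_of is hypothetical, and wlan0 is only an example interface name.

```shell
# Hypothetical helper: print the IPv4 address (without the /prefix length) of
# one interface, reading the output of `ip -o -4 addr` on stdin.
# In that format: $1 = index, $2 = interface name, $3 = "inet", $4 = address/prefix.
ipv4_of() {
  awk -v dev="$1" '$2 == dev { split($4, parts, "/"); print parts[1] }'
}
```

On the robot, run ip -o -4 addr | ipv4_of wlan0, replacing wlan0 with the interface name you used in 60-wireless.yaml. An empty result suggests the interface has not obtained an IPv4 address yet.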

Now that you know the robot's wireless IP address, you can exit the Ethernet SSH session by running exit.

Remove the Ethernet cable and close up your robot. Now you can SSH into your robot over the wireless network. To do so, execute:

ssh administrator@<IP_OF_ROBOT>

SSH sessions allow you to control your robot's internal computer. You can do various things such as download packages, run updates, add/remove files, transfer files etc.

Installing Remote Computer Software

This step is optional.

It is often convenient to use a Remote Computer (e.g. a laptop) to command and observe your robot. To do this, your Remote Computer must be configured correctly.

Perform a basic ROS installation. See here for details.

Install the desktop packages:

sudo apt-get install ros-noetic-ridgeback-desktop

On other Clearpath platforms, install the corresponding package instead: ros-noetic-husky-desktop, ros-noetic-jackal-desktop, ros-noetic-dingo-desktop, ros-noetic-warthog-desktop, or ros-noetic-boxer-desktop.

Configure Remote ROS Connectivity.


To use ROS desktop tools, you will need the Remote Computer to be able to connect to your robot's ROS master. This will allow you to run ROS commands like rostopic list, rostopic echo, rosnode list, and others from the Remote Computer, and the output will reflect the activity on your robot's ROS master rather than on the Remote Computer. This can be a tricky process, but we have tried to make it as simple as possible.

In order for the ROS tools on the Remote Computer to talk to your robot, they need to know two things:

- How to find the ROS master, which is set in the ROS_MASTER_URI environment variable, and

- How processes on the ROS master can find the Remote Computer, which is set in the ROS_IP environment variable.

The suggested pattern is to create a file in your home directory called remote-robot.sh with the following contents:

export ROS_MASTER_URI=http://cpr-robot-0001:11311 # Your robot's hostname

export ROS_IP=10.25.0.102 # Your Remote Computer's wireless IP address

If your network does not already resolve your robot's hostname to its wireless IP address, you may need to add a corresponding line to the Remote Computer's /etc/hosts file:

10.25.0.101 cpr-robot-0001

Note: You can verify the hostname and IP addresses of your robot using the following commands during an SSH session with the Onboard Computer (hostname -I lists all of the robot's configured addresses).

hostname

hostname -I

Then, when you are ready to communicate remotely with your robot, you can source that script as follows, which defines those two key environment variables in the current shell.

source remote-robot.sh

To verify that everything is set up properly, try running a few ROS commands:

rosrun rqt_robot_monitor rqt_robot_monitor

rosrun rqt_console rqt_console

You can also run the RViz commands outlined in the Tutorials.

If the tools launch, then everything is setup properly. If you still need assistance in configuring remote access, please contact Clearpath Support. For more general details on how ROS works over TCP with multiple machines, please see: http://wiki.ros.org/ROS/Tutorials/MultipleMachines. For help troubleshooting a multiple machines connectivity issue, see: http://wiki.ros.org/ROS/NetworkSetup.
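Before launching the GUI tools, it can help to confirm that both variables are set and that the master's hostname resolves. A minimal sketch of such a check, which you might append to remote-robot.sh, is shown below; the function name is hypothetical, and it only checks name resolution, not whether the ROS master is actually running.

```shell
# Hypothetical sanity check for the remote ROS environment.
# Returns non-zero if ROS_MASTER_URI or ROS_IP is unset, or if the master's
# hostname cannot be resolved (for example, a missing /etc/hosts entry).
check_ros_remote_env() {
  if [ -z "${ROS_MASTER_URI:-}" ] || [ -z "${ROS_IP:-}" ]; then
    echo "ROS_MASTER_URI and ROS_IP must both be set" >&2
    return 1
  fi
  # Strip the scheme and the port, e.g. http://cpr-robot-0001:11311 -> cpr-robot-0001
  local host="${ROS_MASTER_URI#*://}"
  host="${host%%[:/]*}"
  if ! getent hosts "$host" > /dev/null; then
    echo "Cannot resolve ROS master host '$host'; check /etc/hosts" >&2
    return 1
  fi
  echo "ROS master $ROS_MASTER_URI resolves; ROS_IP is $ROS_IP"
}
```

After source remote-robot.sh, running check_ros_remote_env prints the master URI if everything resolves and an error message otherwise.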


From your Remote Computer, try launching RViz, the standard ROS robot visualization tool:

roslaunch ridgeback_viz view_robot.launch

On other Clearpath platforms, use the corresponding package instead: husky_viz, jackal_viz, dingo_viz, warthog_viz, or boxer_viz.

From within RViz, you can use interactive markers to drive your robot, visualize its published localization estimate, and visualize any attached sensors that have been added to its URDF robot description.

Adding a Source Workspace

Configuring non-standard peripherals requires a source workspace on the robot computer.

The instructions below use cpr_noetic_ws as the workspace name. You can choose a different workspace name and substitute it in the commands below.

Create a new workspace:

mkdir -p ~/cpr_noetic_ws/src

Add any custom source packages to the ~/cpr_noetic_ws/src directory.

After adding your packages, make sure any necessary dependencies are installed:

cd ~/cpr_noetic_ws/

rosdep install --from-paths src --ignore-src --rosdistro noetic -y

Build the workspace:

cd ~/cpr_noetic_ws/

catkin_make

Modify your robot-wide setup file (/etc/ros/setup.bash) to source your new workspace instead of the base noetic install:

source /home/administrator/cpr_noetic_ws/devel/setup.bash

Reinitialize your environment so that it picks up your new workspace:

source /etc/ros/setup.bash
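To confirm that the environment picked up the workspace, you can check whether its src directory appears on ROS_PACKAGE_PATH, which sourcing a catkin devel space normally extends. A minimal sketch, with a hypothetical helper name:

```shell
# Hypothetical helper: succeed if the given workspace's src directory is
# listed on the colon-separated ROS_PACKAGE_PATH.
workspace_is_sourced() {
  local ws_src="${1%/}/src"   # tolerate a trailing slash on the argument
  case ":${ROS_PACKAGE_PATH:-}:" in
    *":${ws_src}:"*) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, workspace_is_sourced /home/administrator/cpr_noetic_ws && echo "workspace is active". Wrapping the path in colons on both sides avoids false matches on workspaces whose names merely share a prefix.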

Using Ridgeback

Simulating Ridgeback

Whether you actually have a Ridgeback robot or not, the Ridgeback simulator is a great way to get started with ROS robot development. In this tutorial, we will go through the basics of starting Gazebo and RViz and how to drive your Ridgeback around.

Installation

To get started with the Ridgeback simulation, make sure you have a working ROS installation set up on your Ubuntu desktop, and install the Ridgeback-specific metapackages for desktop and simulation:

sudo apt-get install ros-noetic-ridgeback-simulator ros-noetic-ridgeback-desktop

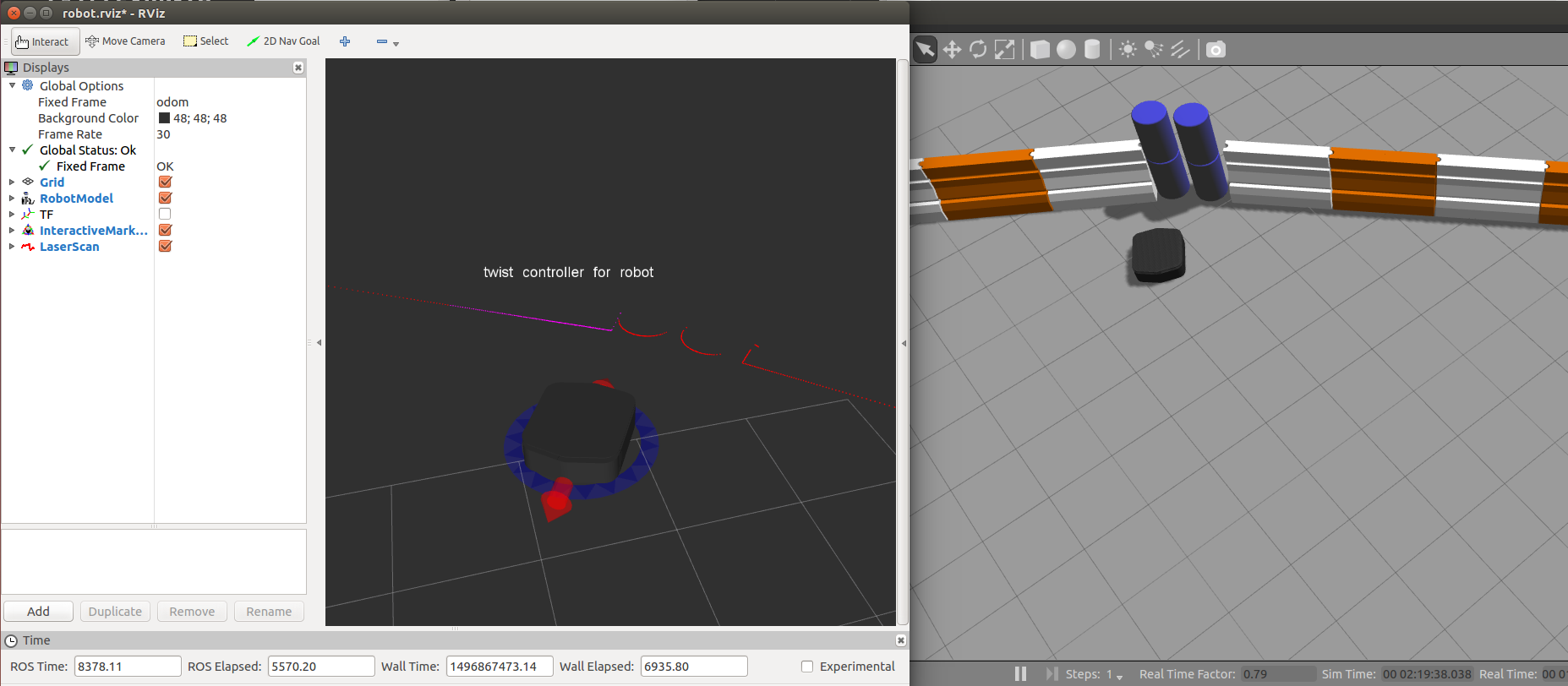

Launching Gazebo

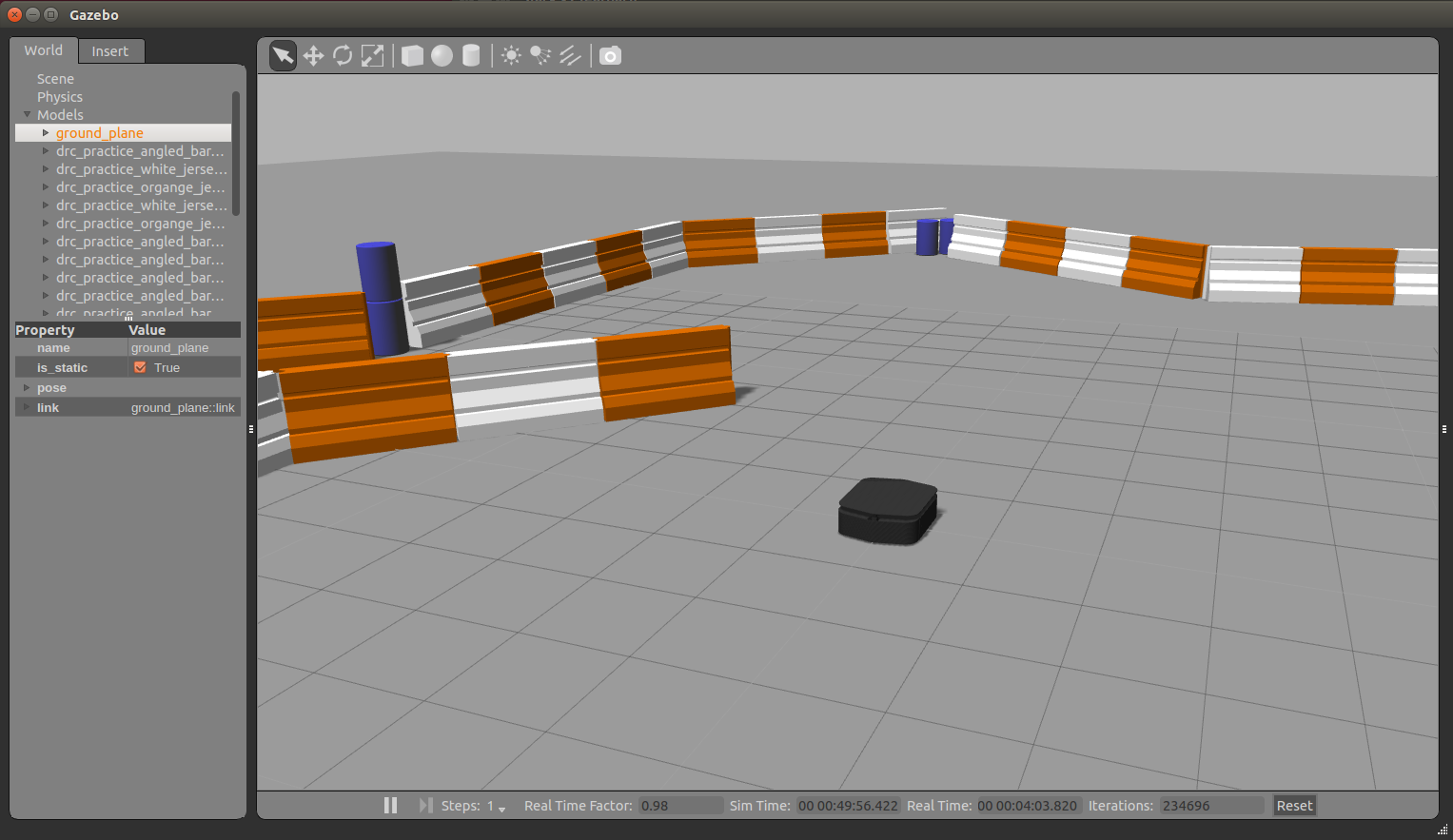

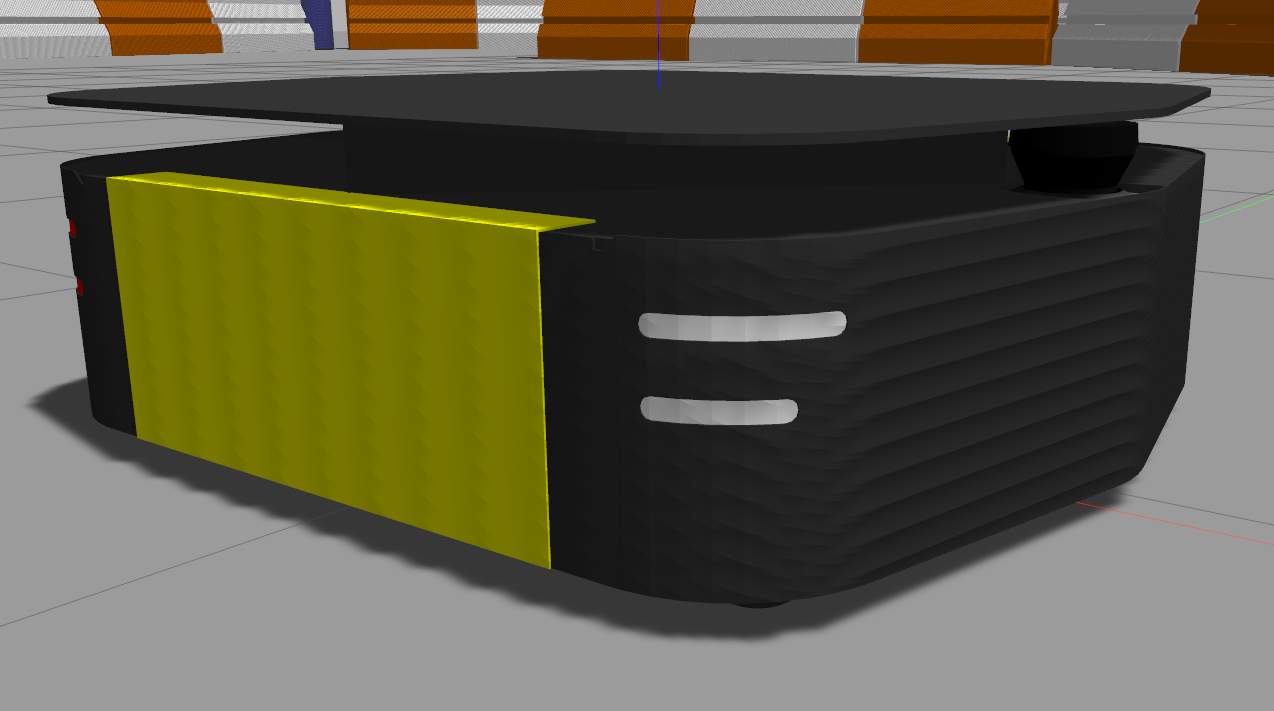

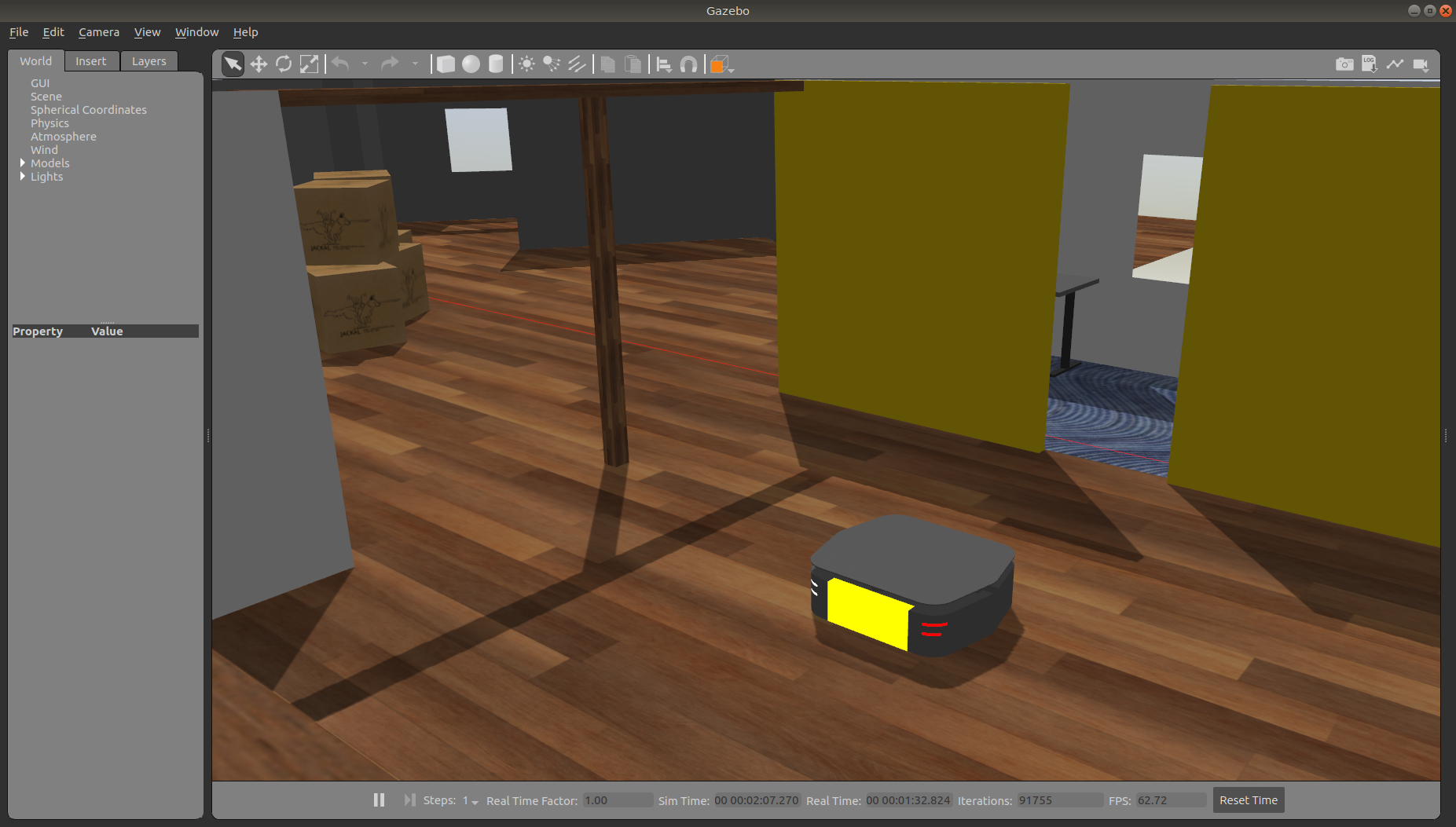

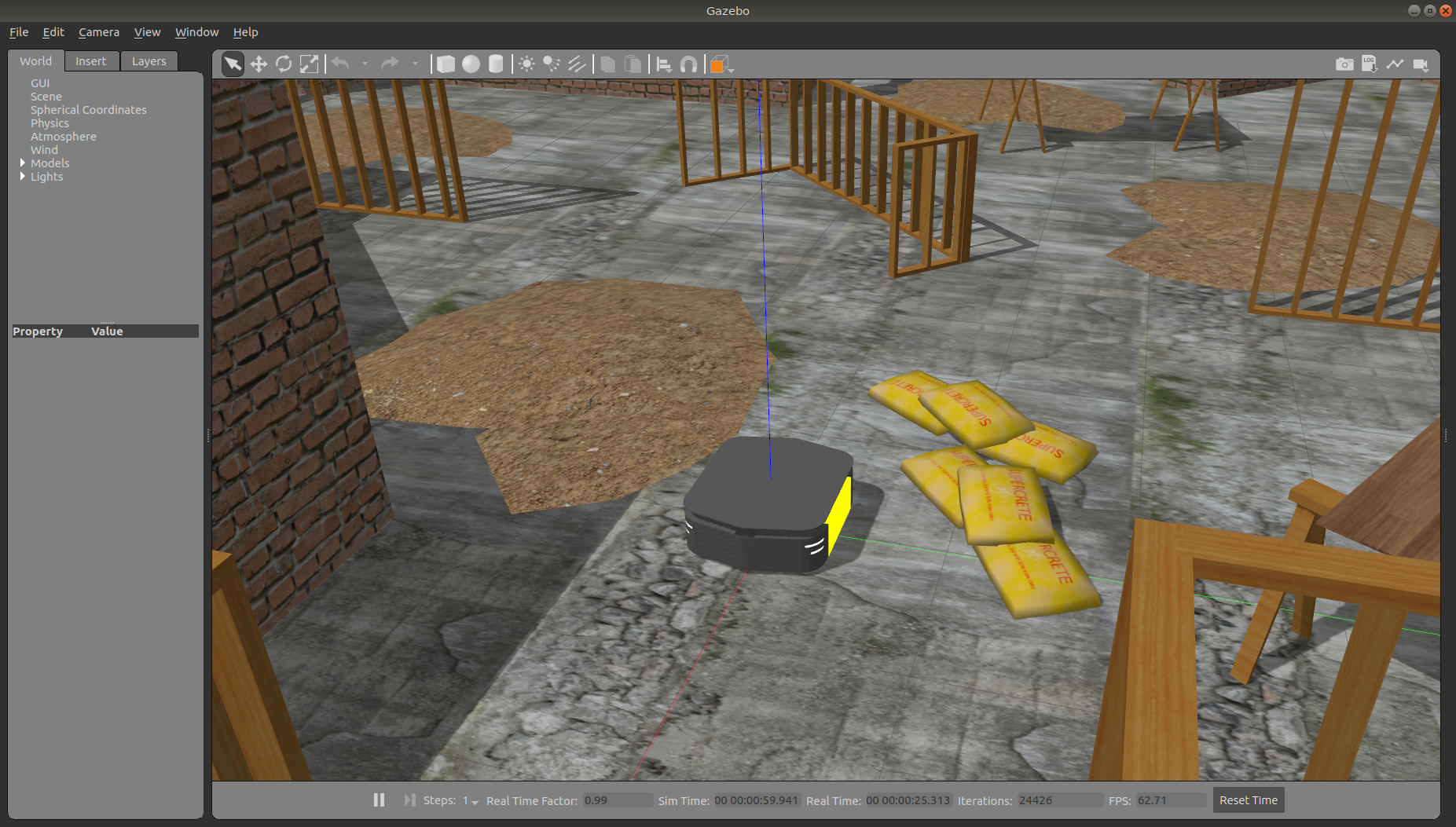

Gazebo is the most common simulation tool used in ROS. Ridgeback's model in Gazebo includes reasonable approximations of its dynamics, including wheel slippage, skidding, and inertia. To launch simulated Ridgeback in a simple example world, run the following command:

roslaunch ridgeback_gazebo ridgeback_world.launch

You should see the following window appear, or something like it. You will see a base Ridgeback spawned with no additional sensors. You can adjust the camera angle by clicking and dragging while holding CTRL, ALT, or the Shift key.

The window which you are looking at is the Gazebo Client. This window shows you the "true" state of the simulated world which the robot exists in. It communicates on the backend with the Gazebo Server, which is doing the heavy lifting of actually maintaining the simulated world. At the moment, you are running both the client and server locally on your own machine, but some advanced users may choose to run heavy duty simulations on separate hardware and connect to them over the network.

When simulating, you must leave Gazebo running. Closing Gazebo will prevent other tools, such as RViz (see below), from working correctly.

See also Additional Simulation Worlds.

Simulation Configs

Note that like Ridgeback itself, Ridgeback's simulator comes in multiple flavours called configs.

A common one which you will need often is the base_sick config.

If you close the Gazebo window, and then CTRL-C out of the terminal process, you can re-launch the simulator with a specific config.

roslaunch ridgeback_gazebo ridgeback_world.launch config:=base_sick

You should now see the simulator running with the simulated SICK LMS-111 laser present.

Gazebo not only simulates the physical presence of the laser scanner, it also provides simulated data which reflects the robot's surroundings in its simulated world. We will visualize the simulated laser scanner data shortly.

Interfacing with Ridgeback

Both simulated and real Ridgeback robots expose the same ROS interface and can be interacted with in the same way.

Please make sure that the desktop packages for Ridgeback are installed:

sudo apt-get install ros-noetic-ridgeback-desktop

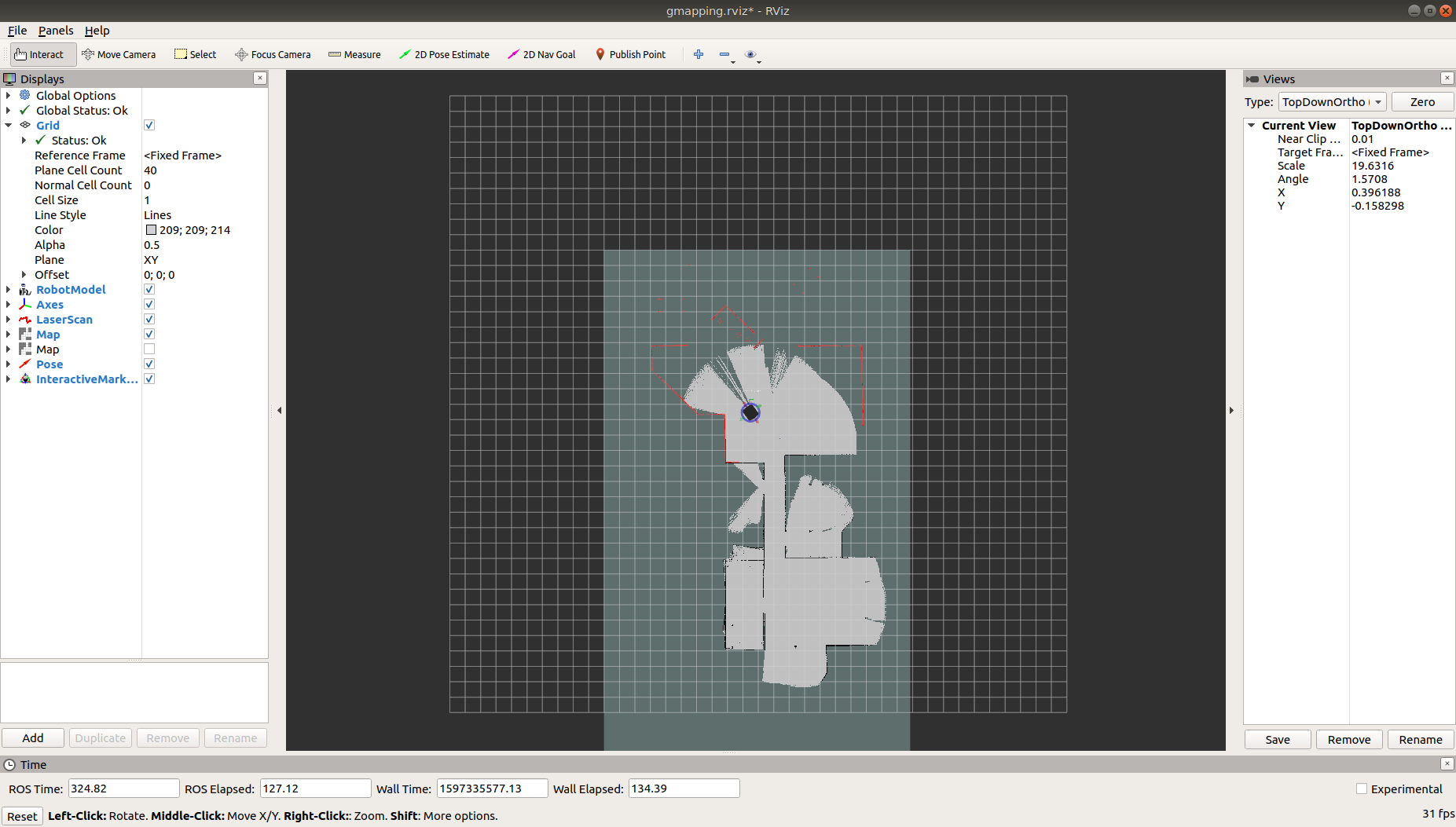

Launching RViz

The next tool we will encounter is RViz. Although superficially similar in appearance to Gazebo, RViz has a very different purpose. Unlike Gazebo, which shows the reality of the simulated world, RViz shows the robot's perception of its world, whether real or simulated. So while Gazebo won't be used with your real Ridgeback, RViz is used with both.

You can use the following launch invocation to start RViz with a predefined configuration suitable for visualizing any standard Ridgeback config.

roslaunch ridgeback_viz view_robot.launch

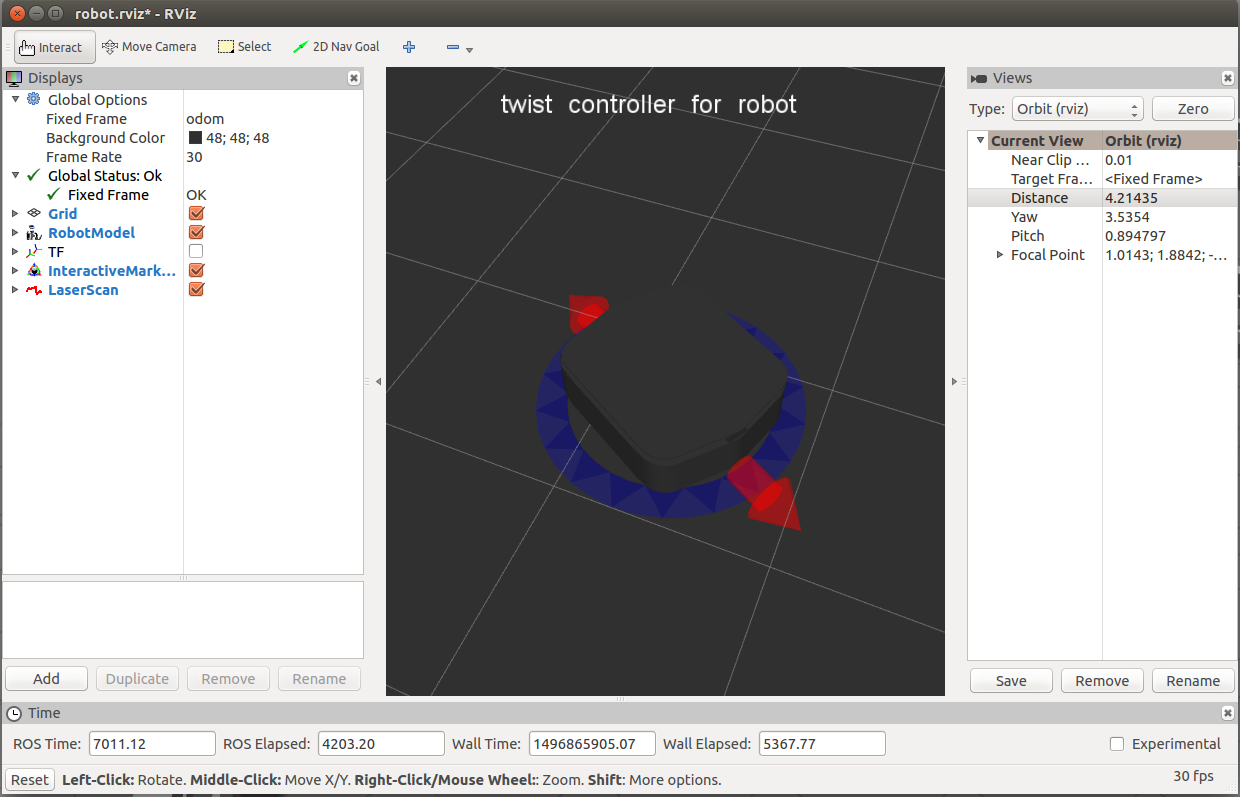

You should see RViz appear.

The RViz display only shows what the robot knows about its world, which at present is nothing. Because the robot doesn't yet know about the barriers that exist in its Gazebo world, they are not shown here.

Driving with Interactive Controller

RViz will also show Ridgeback's interactive markers around your Ridgeback's model. These will appear as a blue ring and red arrows. Depending on your robot, there will also be green arrows. If you don't see them in your RViz display, select the Interact tool from the top toolbar and they should appear.

Drag the red arrows in RViz to move in the linear X direction, and the blue circle to move in the angular Z direction. If your robot supports lateral/sideways movement, you can drag the green arrows to move in the linear Y direction. RViz shows you Ridgeback moving relative to its odometric frame, but it is also moving relative to the simulated world supplied by Gazebo. If you click over to the Gazebo window, you will see Ridgeback moving within its simulated world. Or, if you drive a real Ridgeback using this method, it will have moved in the real world.

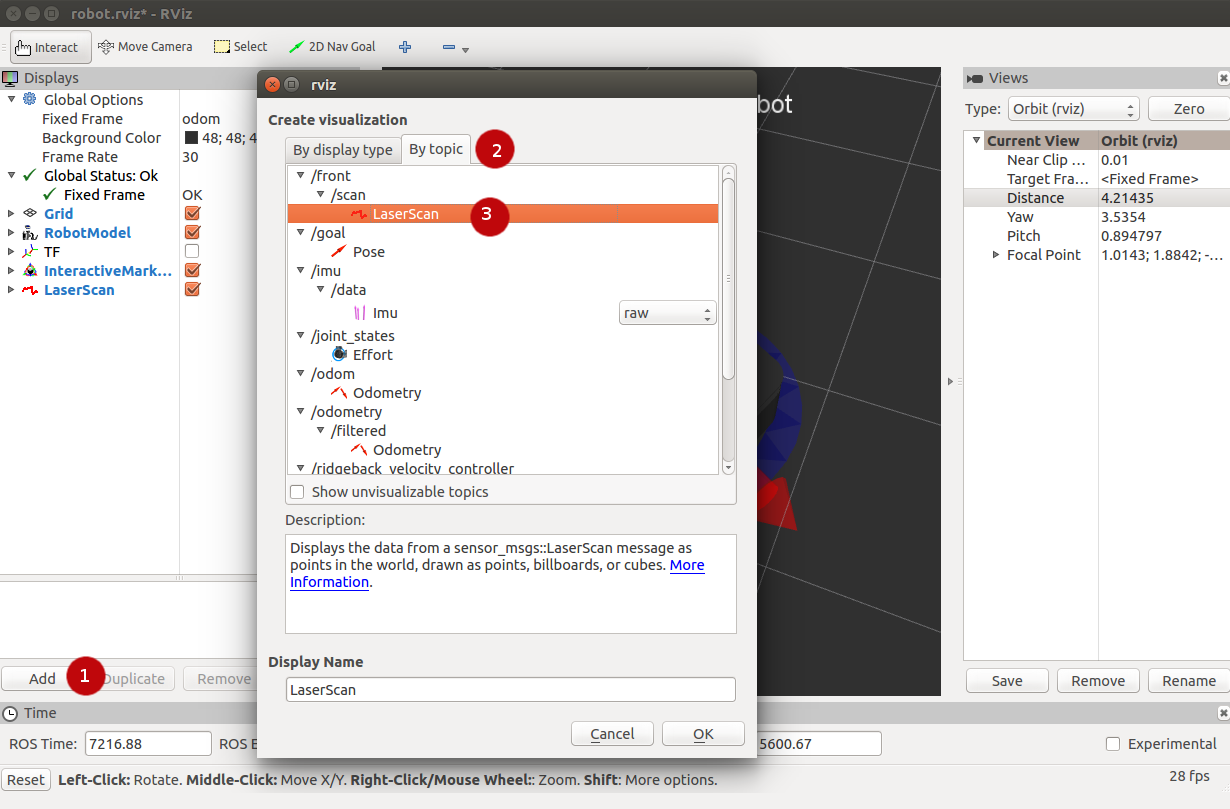

Visualizing Sensors

The RViz tool is capable of visualizing many common robotic sensors, as well as other data feeds which can give us clues as to what the robot is doing and why.

A great place to start with this is adding the LaserScan plugin to visualize the laser scans being produced by the simulated UST-10LX or LMS-111. In the left panel, click the "Add" button, then select the "Topics" tab, and then select the front/scan topic.

Click "OK" and you should see laser scan points now visible in the RViz window, relative to the robot.

If you use the interactive markers to drive around, you'll notice that the laser scan points move a little bit but generally stay where they are. This is the first step toward map making using Gmapping.

Control

There are three ways to send your Ridgeback control commands:

- Using the provided PS4 controller. Refer to the User Manual for details on how to use the controller.

- Using the RViz instance above. If you select the Interact option in the top toolbar, an interactive marker will appear around the Ridgeback and can be used to control speed.

- Using the rqt_robot_steering plugin. Run the rqt command, and select Plugins→Robot Tools→Robot Steering from the top menu.

Ridgeback uses twist_mux to mix separate geometry_msgs/Twist control channels into the ridgeback_velocity_controller/cmd_vel topic.

Additional velocity channels can be defined in twist_mux.yaml, or can be piped into the lowest-priority cmd_vel topic.
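For illustration, an additional channel appended to the topics list in twist_mux's configuration format might look like the fragment below. The channel name, topic, and numbers are hypothetical; check Ridgeback's own twist_mux.yaml for the existing channels and their priorities before choosing values. In twist_mux, a channel with a higher priority overrides lower ones while it is publishing, and a channel is ignored once no message has arrived within its timeout.

```yaml
topics:
  # ... existing Ridgeback channels stay as they are ...
  - name: my_planner            # hypothetical label for the new channel
    topic: my_planner/cmd_vel   # geometry_msgs/Twist input topic (hypothetical)
    timeout: 0.5                # seconds without messages before the channel is ignored
    priority: 5                 # higher values take precedence over lower ones
```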

Odometry

Ridgeback publishes odometry information on the odometry/filtered topic, as nav_msgs/Odometry messages.

These are generated by ekf_localization_node, which processes data from several sensor sources using an Extended Kalman filter (EKF).

This includes data from the wheel encoders and IMU (if available).

Additional odometry information sources can be added to the EKF in robot_localization.yaml.
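As a sketch, assuming the existing configuration already uses odom0 for wheel odometry (check robot_localization.yaml first and use the next free index), a second odometry source could be declared using robot_localization's numbered-parameter convention, alongside the existing odom0 entries. The topic name is hypothetical. The 15 booleans in odom1_config select, in order: x, y, z, roll, pitch, yaw, their six velocities (vx, vy, vz, vroll, vpitch, vyaw), and the three linear accelerations (ax, ay, az). This example fuses only vx, vy, and vyaw, which suits an omnidirectional base.

```yaml
# Hypothetical second odometry source (nav_msgs/Odometry topic).
odom1: /my_visual_odometry/odom
odom1_config: [false, false, false,
               false, false, false,
               true,  true,  false,
               false, false, true,
               false, false, false]
odom1_differential: false
```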

Diagnostics

Diagnostics are only applicable to real Ridgeback robots, not simulation.

Ridgeback provides hardware and software system diagnostics on the ROS standard /diagnostics topic.

The best way to view these messages is using the rqt_runtime_monitor plugin.

Run the rqt command, and select Plugins→Robot Tools→Runtime Monitor from the top menu.

The same information is also published as a ridgeback_msgs/Status message on the /mcu/status topic.

Driving Ridgeback

There are four ways to drive Ridgeback and each way will work on a physical Ridgeback robot as well as on a simulated Ridgeback.

- Using the interactive remote controller in RViz. See Simulating Ridgeback.

- Using autonomous navigation. See Navigating Ridgeback.

- Using the controller for teleoperation. See below.

- Publishing ROS messages. See below.

Ridgeback is capable of reaching high speeds. Careless driving can cause harm to the operator, bystanders, the robot, or other property. Always remain vigilant, ensure you have a clear line of sight to the robot, and operate the robot at safe speeds. We strongly recommend driving in normal (slow) mode first, and only enabling turbo in large, open areas that are free of people and obstacles.

Driving with Remote Controller

For instructions on controller pairing, see Pairing the Controller.

When familiarizing yourself with your robot's operation, always hold the left button (L1/LB). Once you are comfortable with how it operates and you are in a large area with plenty of open room, then you can use R1/RB to enable turbo mode.

Differential Drive Robots (Husky, Jackal, Dingo-D, Warthog, Boxer)

To drive your differential drive robot, Axis 0 controls the robot's steering, Axis 1 controls the forward/backward velocity, Button 4 acts as enable, and Button 5 acts as enable-turbo. On common controllers these correspond to the following physical controls:

| Axis/Button | Physical Input | PS4 | F710 | Xbox One | Action |
|---|---|---|---|---|---|
| Axis 0 | Left thumb stick horizontal | LJ | LJ | LJ | Rotate/turn |
| Axis 1 | Left thumb stick vertical | LJ | LJ | LJ | Drive forward/backward |
| Button 4 | Left shoulder button or trigger | L1 | LB | LB | Enable normal speed |
| Button 5 | Right shoulder button or trigger | R1 | RB | RB | Enable turbo speed |

You must hold either Button 4 or Button 5 at all times to drive the robot.

Omnidirectional Robots (Dingo-O, Ridgeback)

To drive your omnidirectional robot, Axis 0 controls the robot's left/right translation, Axis 1 controls the forward/backward velocity, and Axis 2 controls the robot's rotation. Button 4 acts as enable, and Button 5 acts as enable-turbo on robots that support it. On common controllers these correspond to the following physical controls:

| Axis/Button | Physical Input | PS4 | F710 | Xbox One | Action |
|---|---|---|---|---|---|
| Axis 0 | Left thumb stick horizontal | LJ | LJ | LJ | Translate left/right |
| Axis 1 | Left thumb stick vertical | LJ | LJ | LJ | Drive forward/backward |
| Axis 2 | Right thumb stick horizontal | RJ | RJ | RJ | Rotate/turn |
| Button 4 | Left shoulder button or trigger | L1 | LB | LB | Enable normal speed |
| Button 5 | Right shoulder button or trigger | R1 | RB | RB | Enable turbo speed (Dingo-O only; not supported on Ridgeback) |

You must hold either Button 4 or Button 5 at all times to drive the robot.
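The deadman behaviour in these tables fits in a few lines. The sketch below is not Clearpath's teleop code; it only illustrates the logic: it maps one joystick sample (the axes and buttons arrays of a sensor_msgs/Joy message) to body velocities, returns zero unless Button 4 or Button 5 is held, and ignores Button 5 when no turbo factor is given, as on Ridgeback. The axis indices and scale values are inputs you supply; take them from the table for your controller and from your robot's teleop configuration rather than from the examples here.

```python
"""Sketch: deadman-style mapping from one joystick sample to (vx, vy, wz)."""

ENABLE_BUTTON = 4  # hold for normal speed
TURBO_BUTTON = 5   # hold for turbo speed, where the robot supports it


def joy_to_cmd(axes, buttons, axis_map, scales, turbo_factor=None):
    """Return (vx, vy, wz) for one sample, or zeros unless an enable button is held.

    axis_map: {"x": index, "y": index, "yaw": index}; omit "y" for differential drive.
    scales:   per-key maximum speeds (m/s or rad/s) in normal mode.
    turbo_factor: multiplier used while only the turbo button is held;
                  None means turbo is not supported (as on Ridgeback).
    """
    if buttons[ENABLE_BUTTON]:
        factor = 1.0
    elif buttons[TURBO_BUTTON] and turbo_factor is not None:
        factor = turbo_factor
    else:
        return (0.0, 0.0, 0.0)  # deadman released: command zero velocity

    cmd = []
    for key in ("x", "y", "yaw"):
        index = axis_map.get(key)
        cmd.append(axes[index] * scales[key] * factor if index is not None else 0.0)
    return tuple(cmd)
```

Returning zeros when nothing is held, rather than skipping the command, is what makes releasing the button stop the robot.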

Driving with ROS Messages

You can manually publish geometry_msgs/Twist ROS messages to either the /ridgeback_velocity_controller/cmd_vel or the /cmd_vel ROS topics to drive Ridgeback.

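For example, in a terminal, run:

rostopic pub /ridgeback_velocity_controller/cmd_vel geometry_msgs/Twist '{linear: {x: 0.5, y: 0.0, z: 0.0}, angular: {x: 0.0, y: 0.0, z: 0.0}}'

The command above publishes the message once, so Ridgeback drives forward at 0.5 m/s without any rotation only momentarily: the velocity controller stops the robot shortly after new commands stop arriving. To keep it moving, republish at a steady rate, for example by adding -r 10 to the rostopic pub command.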
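For scripted motion, a small node is more convenient than rostopic. The sketch below drives forward open loop for a requested distance by publishing a constant geometry_msgs/Twist at a fixed rate, followed by an all-zero Twist to stop. The 10 Hz rate, 0.5 m/s speed, 1 m distance, and the node name drive_forward_sketch are example values. Because it does not read odometry, the distance actually travelled will differ somewhat from the request.

```python
"""Sketch: drive Ridgeback forward open loop by republishing a Twist at a fixed rate."""
import importlib.util
import math

TOPIC = "/ridgeback_velocity_controller/cmd_vel"  # topic from the text above


def messages_needed(distance_m, speed_mps, rate_hz):
    """Number of Twist messages to publish at rate_hz to cover distance_m at speed_mps."""
    if distance_m < 0 or speed_mps <= 0 or rate_hz <= 0:
        raise ValueError("distance must be >= 0; speed and rate must be > 0")
    return math.ceil(distance_m / speed_mps * rate_hz)


def main(distance_m=1.0, speed_mps=0.5, rate_hz=10.0):
    import rospy
    from geometry_msgs.msg import Twist

    rospy.init_node("drive_forward_sketch")
    pub = rospy.Publisher(TOPIC, Twist, queue_size=1)
    rospy.sleep(0.5)  # give the controller time to connect before the first message

    cmd = Twist()
    cmd.linear.x = speed_mps  # forward only, no rotation
    rate = rospy.Rate(rate_hz)
    for _ in range(messages_needed(distance_m, speed_mps, rate_hz)):
        if rospy.is_shutdown():
            break
        pub.publish(cmd)
        rate.sleep()
    pub.publish(Twist())  # all-zero Twist: stop


# Only start a ROS node where rospy is available (for example, on the robot).
if __name__ == "__main__" and importlib.util.find_spec("rospy") is not None:
    main()
```

For example, 1 m at 0.5 m/s and 10 Hz works out to 20 messages over 2 seconds.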

Extending Ridgeback Startup

Now that you've had Ridgeback for a while, you may be interested in extending it: adding more payloads, for example, or augmenting the URDF.

Startup Launch Context

When ROS packages are grouped together in a directory and then built as one, the result is referred to as a workspace.

Each workspace generates a setup.bash file which the user may source in order to correctly set up important environment variables such as PATH, PYTHONPATH, and CMAKE_PREFIX_PATH.

The standard system-wide setup file is in /opt:

source /opt/ros/noetic/setup.bash

When you run this command, you'll have access to rosrun, roslaunch, and all the other tools and packages installed on your system from Debian packages.

However, sometimes you want to add additional system-specific environment variables, or perhaps packages built from source.

For this reason, Clearpath platforms use a wrapper setup file, located in /etc/ros:

source /etc/ros/setup.bash

This is the setup file which gets sourced by Ridgeback's background launch job, and in the default configuration, it is also sourced on your login session. For this reason it can be considered the "global" setup file for Ridgeback's ROS installation.

This file sets some environment variables and then sources a chosen ROS workspace, so it is one of your primary modification points for altering how Ridgeback launches.

Launch Files

The second major modification point is the /etc/ros/noetic/ros.d directory.

This location contains the launch files associated with the ros background job.

If you add launch files here, they will be launched with Ridgeback's startup.

However, it's important to note that in the default configuration, any launch files you add may only reference ROS software installed in /opt/ros/noetic.

If you want to launch something from a workspace in your home directory, you must change /etc/ros/setup.bash to source that workspace's setup file instead of the one in /opt.
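Why the sourced workspace matters can be modeled in a few lines. After a setup.bash is sourced, ROS tools that resolve packages (such as roslaunch's $(find ...)) search the directories in ROS_PACKAGE_PATH in order, and the first directory that provides a package wins. The sketch below is a simplified model of that lookup, not rospack's implementation; packages_on_disk is a hypothetical stand-in for scanning the filesystem, and the /home/user/catkin_ws path and my_payload package are examples.

```python
"""Simplified model of overlay lookup through ROS_PACKAGE_PATH (not rospack itself)."""


def resolve_package(name, ros_package_path, packages_on_disk):
    """Return the first directory in ros_package_path that provides `name`, else None.

    ros_package_path: colon-separated string, as in ROS_PACKAGE_PATH on Linux.
    packages_on_disk: hypothetical {directory: set of package names} stand-in for a disk scan.
    """
    for directory in ros_package_path.split(":"):
        if name in packages_on_disk.get(directory, set()):
            return directory
    return None


# Example layout (hypothetical paths and package names).
DISK = {
    "/opt/ros/noetic/share": {"ridgeback_description", "ridgeback_navigation"},
    "/home/user/catkin_ws/src": {"my_payload", "ridgeback_description"},
}
SYSTEM_ONLY = "/opt/ros/noetic/share"                            # only /opt is sourced
WITH_OVERLAY = "/home/user/catkin_ws/src:/opt/ros/noetic/share"  # workspace sourced on top
```

With only /opt/ros/noetic sourced, a launch file in ros.d that refers to my_payload cannot be resolved; once the workspace is sourced, its packages are found first and shadow same-named system packages.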

Adding URDF

There are two possible approaches to augmenting Ridgeback's URDF.

The first is to set the RIDGEBACK_URDF_EXTRAS environment variable in /etc/ros/setup.bash.

By default, it points to an empty dummy file, but you can point it to a file of additional links and joints which you would like mixed into Ridgeback's URDF (via xacro) at runtime.

The second, more sophisticated way to modify the URDF is to create a new package for your own robot, and build your own URDF which wraps the one provided by ridgeback_description.

Keeping Ridgeback Updated

For details on updating Ridgeback software or firmware, refer to Software Maintenance.

Navigating Ridgeback

To get all Navigation related files for Ridgeback, run:

sudo apt-get install ros-noetic-ridgeback-navigation

Below are the example launch files for three different configurations for navigating Ridgeback:

- Navigation in an odometric frame without a map, using only move_base.

- Generating a map using Gmapping.

- Localization with a known map using amcl.

Referring to the Simulating Ridgeback instructions, bring up Ridgeback with the front laser enabled for the following demos:

roslaunch ridgeback_gazebo ridgeback_world.launch config:=front_laser

If you're working with a real Ridgeback, it's suggested to connect via SSH and launch the ridgeback_navigation launch files from onboard the robot. You'll need to have bidirectional communication with the robot's roscore in order to launch RViz on your workstation (see here).

Navigation without a Map

In the odometry navigation demo, Ridgeback attempts to reach a given goal in the world within a user-specified tolerance.

The 2D navigation, generated by move_base, takes in information from odometry, laser scanner, and a goal pose and outputs safe velocity commands.

In this demo the configuration of move_base is set for navigation without a map in an odometric frame (that is, without reference to a map).

To launch the navigation demo, run:

roslaunch ridgeback_navigation odom_navigation_demo.launch

To visualize with the suggested RViz configuration launch:

roslaunch ridgeback_viz view_robot.launch config:=navigation

To send goals to the robot, select the 2D Nav Goal tool from the top toolbar, and then click anywhere in the RViz view to set the position. Alternatively, click and drag slightly to set the goal position and orientation.
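If you later send goals from code instead of RViz, the heading that the 2D Nav Goal tool sets by dragging has to be expressed as a quaternion. For a planar robot, a heading of yaw radians about the vertical axis becomes (x, y, z, w) = (0, 0, sin(yaw/2), cos(yaw/2)). The helpers below are a minimal sketch of that conversion; the resulting values would go into target_pose.pose.orientation of a move_base_msgs/MoveBaseGoal. The planar_goal dictionary and its default odom frame are assumptions for illustration.

```python
"""Sketch: planar goal pose with a yaw-only quaternion."""
import math


def yaw_to_quaternion(yaw):
    """Quaternion (x, y, z, w) for a rotation of `yaw` radians about +Z."""
    half = 0.5 * yaw
    return (0.0, 0.0, math.sin(half), math.cos(half))


def quaternion_to_yaw(q):
    """Yaw (heading) of a unit quaternion (x, y, z, w)."""
    x, y, z, w = q
    return math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))


def planar_goal(x, y, yaw, frame_id="odom"):
    """Dict mirroring geometry_msgs/PoseStamped fields.

    frame_id is an assumption: "odom" for the map-less demo, typically "map" with amcl.
    """
    qx, qy, qz, qw = yaw_to_quaternion(yaw)
    return {
        "header": {"frame_id": frame_id},
        "pose": {
            "position": {"x": x, "y": y, "z": 0.0},
            "orientation": {"x": qx, "y": qy, "z": qz, "w": qw},
        },
    }
```

For example, a goal facing 90 degrees to the left of the frame's X axis has z = w = sqrt(0.5).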

If you wish to customize the parameters of move_base, local costmap, global costmap and base_local_planner, clone ridgeback_navigation into your own workspace and modify the corresponding files in the params subfolder.

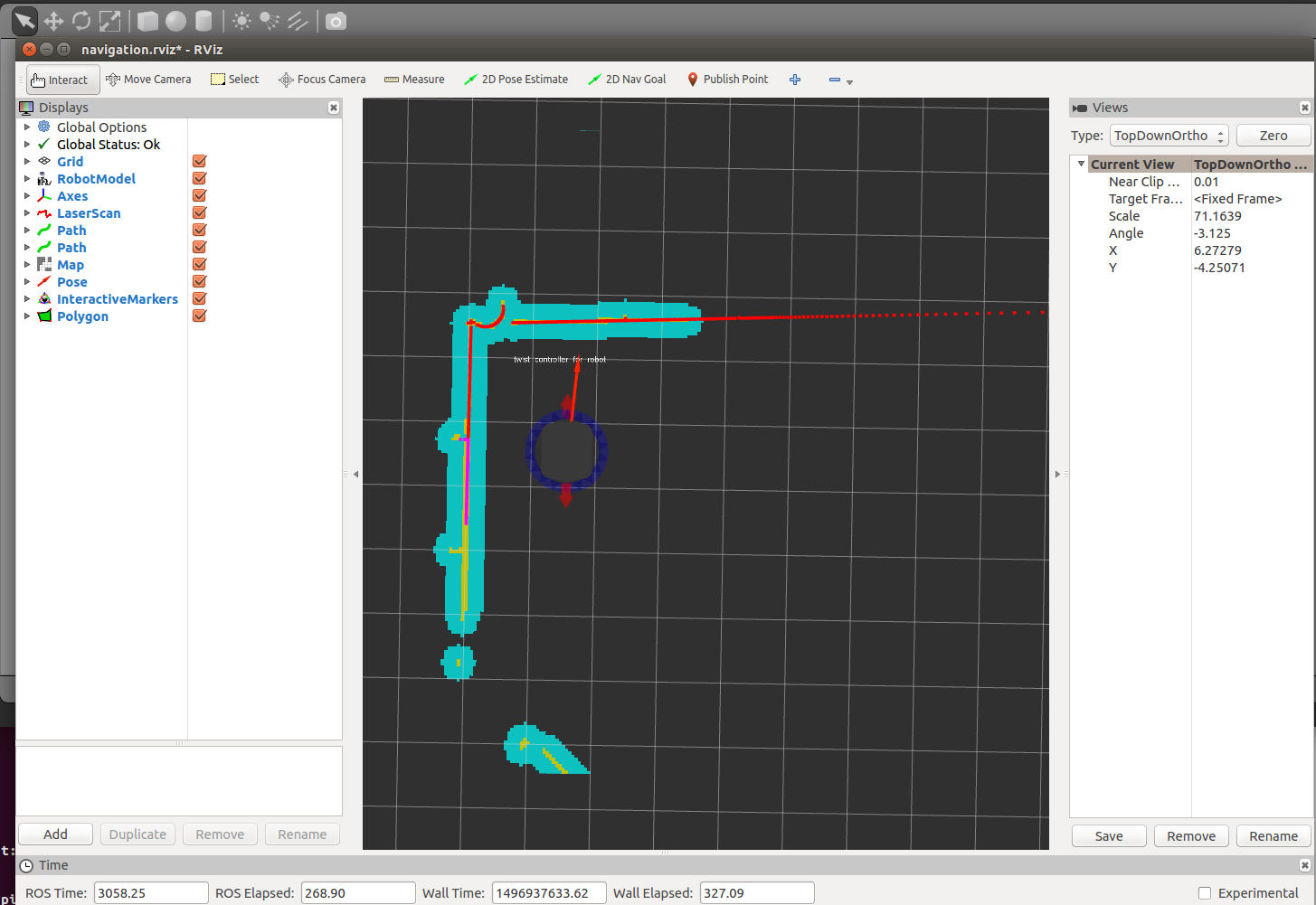

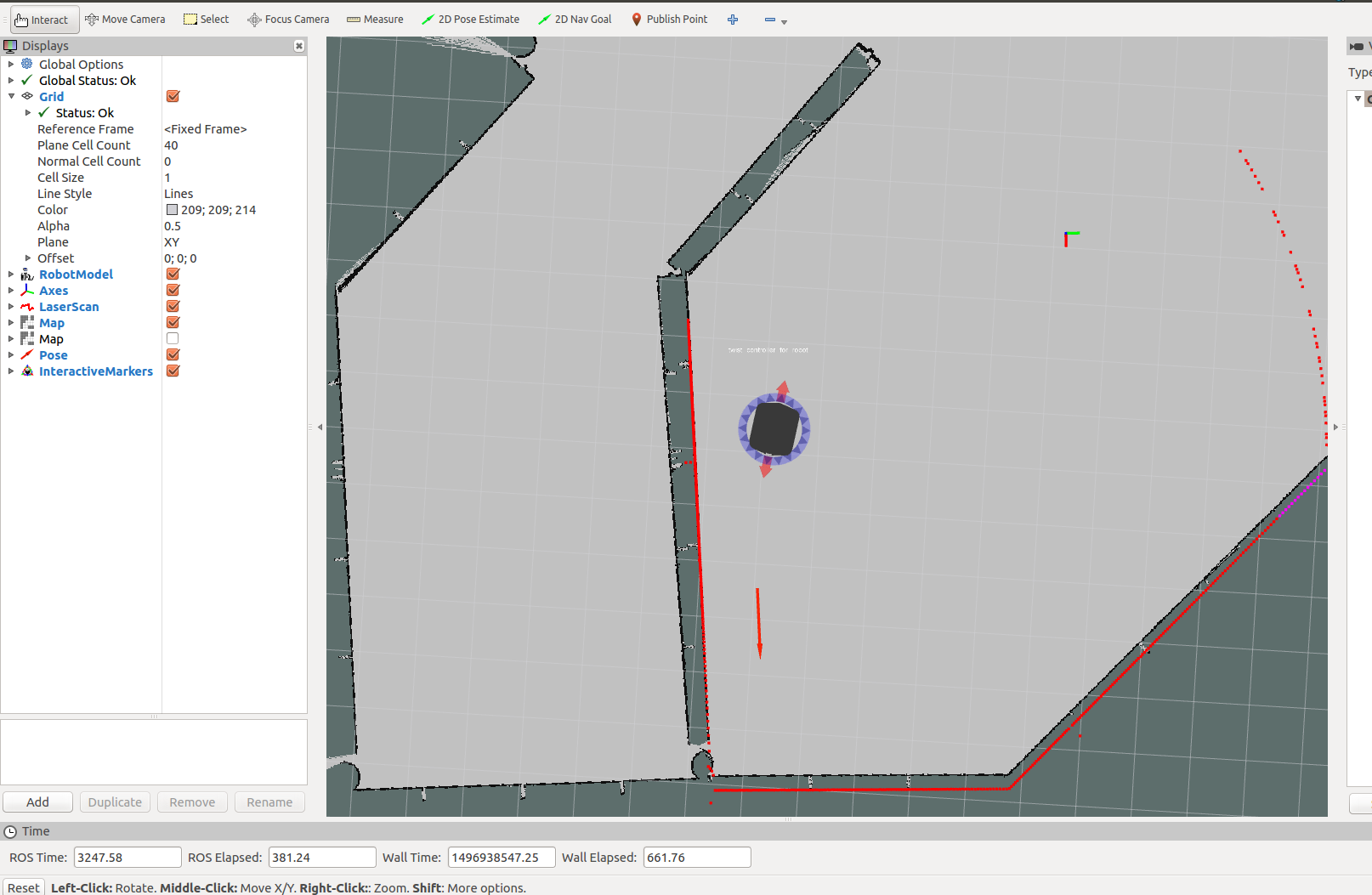

Making a Map

In this demonstration, Ridgeback generates a map using Gmapping. Begin by launching the Gmapping launch file on the robot:

roslaunch ridgeback_navigation gmapping_demo.launch

And on your workstation, launch RViz with the suggested configuration:

roslaunch ridgeback_viz view_robot.launch config:=gmapping

You must slowly drive Ridgeback around to build the map. As obstacles come into view of the laser scanner, they will be added to the map, which is shown in RViz. You can either drive manually using the interactive markers, or semi-autonomously by sending navigation goals (as above).

When you're satisfied, you can save the produced map using map_saver:

rosrun map_server map_saver -f mymap

This will create mymap.yaml and mymap.pgm files in your current directory.
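mymap.yaml records how to interpret mymap.pgm: the resolution (metres per pixel), the origin (the pose of the lower-left pixel), and the occupied_thresh and free_thresh values used to classify each pixel. The sketch below shows both conversions following map_server's documented conventions. It takes the YAML values as plain arguments rather than parsing the file, and the defaults of 0.65 and 0.196 are the values map_saver typically writes, so check them against your own mymap.yaml.

```python
"""Sketch: interpret a map_saver map (mymap.yaml + mymap.pgm) pixel by pixel."""


def classify_pixel(value, occupied_thresh=0.65, free_thresh=0.196, negate=0):
    """Classify an 8-bit grey value the way map_server's default trinary mode does."""
    p = value / 255.0 if negate else (255 - value) / 255.0  # occupancy probability
    if p > occupied_thresh:
        return "occupied"
    if p < free_thresh:
        return "free"
    return "unknown"


def pixel_to_world(col, row, image_height, resolution, origin):
    """World (x, y) of the centre of pixel (col, row).

    Row 0 is the top row of the PGM; origin = [x, y, yaw] is the pose of the
    lower-left pixel (yaw is ignored here).
    """
    x = origin[0] + (col + 0.5) * resolution
    y = origin[1] + (image_height - row - 0.5) * resolution
    return x, y
```

With these thresholds, map_saver's black (0), near-white (254) and grey (205) pixels come back as occupied, free and unknown respectively.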

Navigation with a Map

Using amcl, Ridgeback is able to globally localize itself in a known map. AMCL takes in information from odometry, laser scanner and an existing map and estimates the robot's pose.

To start the AMCL demo:

roslaunch ridgeback_navigation amcl_demo.launch map_file:=/path/to/my/map.yaml

If you don't specify map_file, it defaults to an included pre-made map of the default "Ridgeback Race" environment which Ridgeback's simulator spawns in.

If you're using a real Ridgeback in your own environment, you'll definitely want to override this with the map created using the Gmapping demo.

Before navigating, you need to initialize the localization system by setting the pose of the robot in the map.

This can be done using the 2D Pose Estimate tool in RViz or by setting amcl's initial_pose_x, initial_pose_y, and initial_pose_a parameters. To visualize with the suggested RViz configuration, launch:

roslaunch ridgeback_viz view_robot.launch config:=localization

When RViz appears, select the 2D Pose Estimate tool from the toolbar, and click on the map to indicate approximately where the robot is.
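The 2D Pose Estimate tool publishes a geometry_msgs/PoseWithCovarianceStamped message on /initialpose, which amcl uses to seed its estimate. If you publish that message yourself, its covariance is a row-major 6x6 matrix over (x, y, z, roll, pitch, yaw), so only three diagonal entries matter for a planar robot. The sketch below fills them from standard deviations. The defaults of 0.5 m and pi/12 rad are chosen to match amcl's default initial covariances (initial_cov_xx, initial_cov_yy, initial_cov_aa) as we understand them, and the function name is hypothetical.

```python
"""Sketch: covariance for an initial pose estimate sent to amcl."""
import math


def initial_pose_covariance(std_x=0.5, std_y=0.5, std_yaw=math.pi / 12):
    """Row-major 6x6 covariance over (x, y, z, roll, pitch, yaw) with planar variances set.

    Inputs are standard deviations; the matrix stores their squares (variances).
    """
    cov = [0.0] * 36
    cov[0 * 6 + 0] = std_x ** 2    # var(x)
    cov[1 * 6 + 1] = std_y ** 2    # var(y)
    cov[5 * 6 + 5] = std_yaw ** 2  # var(yaw)
    return cov
```

Larger standard deviations tell amcl you are less sure of the estimate, which helps when you only know roughly where the robot is.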

Ridgeback Tests

Ridgeback robots come preinstalled with a set of test scripts as part of the ridgeback_tests ROS package, which can be run to verify robot functionality at the component and system levels.

If your Ridgeback does not have the ridgeback_tests ROS package installed already, you can install it manually by opening a terminal and running:

sudo apt-get install ros-noetic-ridgeback-tests

ROS Tests

The ros_tests script exposes a set of interactive tests to verify the functionality of core features.

These tests run at the ROS level via ROS topics, and serve as a useful robot-level diagnostic tool for identifying the root cause of problems or, at the very least, narrowing down where the root cause(s) may be.

Running ROS Tests

To run ros_tests on a Ridgeback robot, open a terminal and run:

rosrun ridgeback_tests ros_tests

Upon running ros_tests, a list of available tests will be shown in a menu.

From the menu, you can choose individual tests to run, or simply choose the option to automatically run all the tests.

The details of each test are shown below.


Lighting Test

The Lighting Test checks that the robot's lights are working properly.

This test turns the lights off, red, green, and blue (in order) by publishing lighting commands to the /cmd_lights ROS topic. The user will be asked to verify that the lights change to the expected colours.

Motion Stop Test

The Motion Stop Test checks that the robot's motion-stop is working properly.

This test subscribes to the /mcu/status ROS topic and checks that when the motion-stop is manually engaged by the user, the motion-stop state is correctly reported on the /mcu/status ROS topic. The user will be asked to verify that the lights flash red while the motion-stop is engaged.

ADC Test

The ADC Test checks that the robot's voltage and current values across its internal hardware components are within expected tolerances.

This test subscribes to the /mcu/status ROS topic and checks that the voltage and current values across the internal hardware are within expected tolerances.

Rotate Test

The Rotate Test rotates the robot counter-clockwise 2 full revolutions and checks that the motors, IMU, and EKF odometry are working properly.

This test:

- Subscribes to the /imu/data ROS topic to receive angular velocity measurements from the IMU's gyroscope. These measurements are converted into angular displacement estimations, and the robot will rotate until 2 full revolutions are estimated.

- Subscribes to the /odometry/filtered ROS topic to receive angular velocity estimations from the EKF odometry. These are converted into angular displacement estimations, which are output for comparison with the angular displacement estimations from the IMU's gyroscope.

- Publishes to the /cmd_vel ROS topic to send drive commands that rotate the robot.

- Asks the user to verify that the robot rotates 2 full revolutions.

Note: The Rotate Test rotates the robot using the IMU's gyroscope data, which is inherently not 100% accurate. Therefore, some undershoot or overshoot is to be expected.
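The Rotate Test's stopping rule amounts to integrating the gyroscope's z angular velocity over time until the accumulated angle reaches two revolutions (4π radians). The sketch below illustrates that idea with trapezoidal integration; it is not the ridgeback_tests code, and the YawIntegrator class and sample values are hypothetical. Small gyro bias and timing jitter accumulate in the running sum, which is one reason the note above expects some undershoot or overshoot.

```python
"""Sketch: estimate accumulated rotation by integrating gyro z angular velocity."""
import math

TARGET_RAD = 2 * 2 * math.pi  # two full revolutions


class YawIntegrator:
    """Accumulates angle from (timestamp, wz) samples with the trapezoidal rule."""

    def __init__(self):
        self.angle = 0.0   # radians; counter-clockwise positive (z up)
        self._last = None  # previous (t, wz) sample

    def update(self, t, wz):
        """Add the area under wz between the previous sample and this one."""
        if self._last is not None:
            t0, w0 = self._last
            self.angle += 0.5 * (w0 + wz) * (t - t0)
        self._last = (t, wz)
        return self.angle

    def done(self, target=TARGET_RAD):
        """True once the magnitude of the accumulated angle reaches the target."""
        return abs(self.angle) >= target

    def revolutions(self):
        return self.angle / (2 * math.pi)
```

In a node, update() would be called from the /imu/data callback with the message stamp and angular_velocity.z.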


Drive Test

The Drive Test drives the robot forward 1 metre and checks that the motors, encoders, and encoder-fused odometry are working properly.

This test:

- Subscribes to the /ridgeback_velocity_controller/odom ROS topic to receive linear displacement estimations from the encoder-fused odometry. The robot will drive forward until 1 metre is estimated.

- Subscribes to the /feedback ROS topic to receive linear displacement measurements from the individual encoders. These measurements are output for comparison with the linear displacement estimations from the encoder-fused odometry.

- Subscribes to the /joint_states ROS topic to receive linear displacement measurements from the individual encoders. These measurements are output for comparison with the linear displacement estimations from the encoder-fused odometry.

- Publishes to the /cmd_vel ROS topic to send drive commands that drive the robot.

- Asks the user to verify that the robot drives forward 1 metre.

Note: The Drive Test drives the robot using the odometry data, which is inherently not 100% accurate. Therefore, some undershoot or overshoot is to be expected.
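The Drive Test compares two displacement estimates: the straight-line distance covered according to odometry, and the arc length rolled by the wheels according to their encoders. The sketch below computes both. It assumes wheel joint positions in radians that increase when the robot drives forward, and the wheel radius in any example you try is a placeholder rather than Ridgeback's actual dimension.

```python
"""Sketch: compare odometry and encoder displacement, as in the Drive Test."""
import math

TARGET_M = 1.0  # the Drive Test drives forward 1 metre


def odom_displacement(start_xy, current_xy):
    """Straight-line distance (metres) between two odometry positions."""
    return math.hypot(current_xy[0] - start_xy[0], current_xy[1] - start_xy[1])


def encoder_displacement(start_angles, current_angles, wheel_radius):
    """Mean wheel arc length (metres) from joint angles in radians.

    Assumes every joint's angle increases when the robot drives forward.
    """
    arcs = [(c - s) * wheel_radius for s, c in zip(start_angles, current_angles)]
    return sum(arcs) / len(arcs)


def reached_target(distance_m, target_m=TARGET_M):
    return distance_m >= target_m
```

A large gap between the two numbers after a straight 1 metre run points at slipping wheels, a faulty encoder, or a wrong wheel radius in the configuration.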


Cooling Test

The Cooling Test checks that the fans are working properly.

This test makes the fans spin at different speeds by publishing fan speed commands to the /mcu/cmd_fans ROS topic. The user will be asked to verify that the fans change to the expected speeds.

CAN Bus Test

The check_can_bus_interface script checks that communication between the motors, encoders, robot's MCU, and robot's computer are working properly over the CAN bus interface.

This script verifies that the can0 interface is detected and activated, then proceeds to check the output of candump to verify that good CAN packets are being transmitted.

Running CAN Bus Test

To run the check_can_bus_interface script on a Ridgeback robot, open a terminal and run:

rosrun ridgeback_tests check_can_bus_interface
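To get a feel for what "good CAN packets" means in candump output, the sketch below parses candump's default line format (interface, hexadecimal ID, [DLC], data bytes), counts frames per ID, and flags lines that do not parse or whose byte count disagrees with the DLC. It is an illustrative check under those assumptions, not the logic of check_can_bus_interface; output with timestamps enabled would need a different pattern.

```python
"""Sketch: summarize candump output (default line format only)."""
import re
from collections import Counter

# Example default line: "  can0  123   [8]  11 22 33 44 55 66 77 88"
LINE = re.compile(
    r"^\s*(?P<iface>\S+)\s+(?P<id>[0-9A-Fa-f]+)\s+\[(?P<dlc>\d)\]\s*"
    r"(?P<data>(?:[0-9A-Fa-f]{2}\s*)*)$"
)


def summarize_candump(lines, iface="can0"):
    """Return (frames per CAN ID, list of suspicious lines) for one interface."""
    counts = Counter()
    bad = []
    for line in lines:
        if not line.strip():
            continue  # skip blank lines
        m = LINE.match(line)
        if m is None:
            bad.append(line)  # not a recognizable candump line
            continue
        if m.group("iface") != iface:
            continue  # traffic on other interfaces is ignored
        payload = bytes.fromhex("".join(m.group("data").split()))
        if len(payload) != int(m.group("dlc")):
            bad.append(line)  # byte count disagrees with the DLC
            continue
        counts[m.group("id").upper()] += 1
    return counts, bad
```

You could feed it a few seconds of captured candump can0 output; a steady count per ID and an empty bad list are what healthy traffic looks like under these assumptions.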

Advanced Topics

Configuring the Network Bridge

Your computer is configured to bridge its physical network ports together. This allows any network port to be used as a connection to the internal 192.168.131.1/24 network for connecting sensors, diagnostic equipment, or manipulators, or for connecting your robot to the internet for the purposes of installing updates.

In the unlikely event you must modify your robot's Ethernet bridge, you can do so by editing the configuration file found at /etc/netplan/50-clearpath-bridge.yaml:

# Configure the wired ports to form a single bridge
# We assume wired ports are en* or eth*
# This host will have address 192.168.131.1
network:
  version: 2
  renderer: networkd
  ethernets:
    bridge_eth:
      dhcp4: no
      dhcp6: no
      match:
        name: eth*
    bridge_en:
      dhcp4: no
      dhcp6: no
      match:
        name: en*
  bridges:
    br0:
      dhcp4: yes
      dhcp6: no
      interfaces: [bridge_en, bridge_eth]
      addresses:
        - 192.168.131.1/24

This file will create a bridged interface called br0 that will have a static address of 192.168.131.1, but will also be able to accept a DHCP lease when connected to a wired router. By default, all network ports named en* and eth* are added to the bridge. This includes all common wired port names, such as eth0, eno1, enx0123456789ab, enp3s0, etc.

To include/exclude additional ports from the bridge, edit the match fields, or add additional bridge_* sections with their own match fields, and add those interfaces to the interfaces: [bridge_en, bridge_eth] line near the bottom of the file.
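Netplan's match: name: fields accept shell-style globs, so you can preview which interfaces a rule would pull into br0 before editing the file. The sketch below approximates that matching with Python's fnmatch module. The interface names in the usage are the ones listed above plus a hypothetical Wi-Fi adapter, wlp2s0, which is not bridged because it matches neither en* nor eth*.

```python
"""Sketch: preview which interfaces the bridge's match rules select."""
from fnmatch import fnmatchcase

# netplan id -> match.name glob, copied from 50-clearpath-bridge.yaml above
BRIDGE_MATCHES = {"bridge_en": "en*", "bridge_eth": "eth*"}


def bridged_ports(interface_names, matches=BRIDGE_MATCHES):
    """Map each interface that some rule matches to the netplan id that matched it."""
    selected = {}
    for name in interface_names:
        for netplan_id, pattern in matches.items():
            if fnmatchcase(name, pattern):  # case-sensitive glob, like interface names
                selected[name] = netplan_id
                break
    return selected
```

For example, bridged_ports(["eth0", "eno1", "enx0123456789ab", "enp3s0", "wlp2s0", "lo"]) selects the four wired ports and leaves wlp2s0 and lo out. Adding a candidate pattern to BRIDGE_MATCHES lets you check a new bridge_* rule before writing it to the file.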

We do not recommend changing the static address of the bridge to anything other than 192.168.131.1; changing it may prevent sensors that communicate over Ethernet (e.g. lidars, cameras, GPS arrays) from working properly.

See also Network IP Addresses for common IP addresses on Clearpath robots.

Additional Simulation Worlds

In addition to the default ridgeback_world.launch file, ridgeback_gazebo also contains spawn_ridgeback.launch, which is intended to be included in any custom world to add a Ridgeback simulation to it.

To add a Ridgeback to any of your own worlds, simply include the spawn_ridgeback.launch file in your own world's launch:

<include file="$(find ridgeback_gazebo)/launch/spawn_ridgeback.launch">
  <!-- Optionally configure the spawn position; x, y, z and yaw must be declared as <arg> elements in your own launch file -->
  <arg name="x" value="$(arg x)"/>
  <arg name="y" value="$(arg y)"/>
  <arg name="z" value="$(arg z)"/>
  <arg name="yaw" value="$(arg yaw)"/>
</include>

Finally, Clearpath provides an additional suite of simulation environments that can be downloaded separately and used with Ridgeback, as described below.

Clearpath Gazebo Worlds

The Clearpath Gazebo Worlds collection contains 4 different simulation worlds, representative of different environments our robots are designed to operate in:

- Inspection World: a hilly outdoor world with water and a cave

- Agriculture World: a flat outdoor world with a barn, fences, and solar farm

- Office World: a flat indoor world with enclosed rooms and furniture

- Construction World: office world, under construction with small piles of debris and partial walls

Ridgeback is supported in the Office and Construction worlds.

Installation

To download the Clearpath Gazebo Worlds, clone the repository from GitHub into the same workspace as your Ridgeback:

cd ~/catkin_ws/src

git clone https://github.com/clearpathrobotics/cpr_gazebo.git

Before you can build the package, make sure to install its dependencies.

Because Clearpath Gazebo Worlds depends on all of our robots' simulation packages, and some of these are currently only available as source code, installing dependencies with rosdep install --from-paths [...] will likely fail.

To simulate Ridgeback in the Office and Construction worlds, the only additional dependency is the gazebo_ros package.

Once the dependencies are installed, you can build the package:

cd ~/catkin_ws

catkin_make

source devel/setup.bash

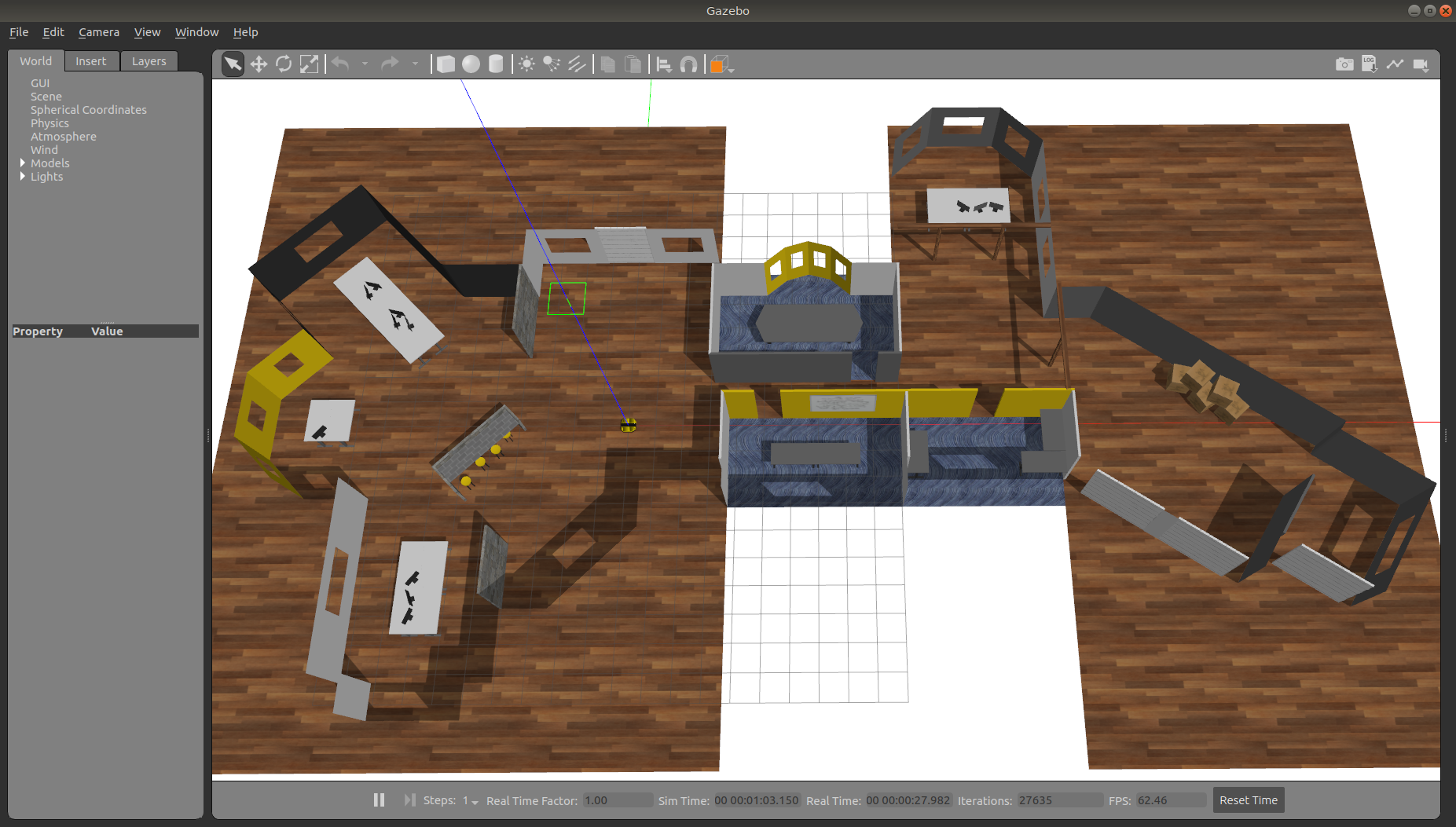

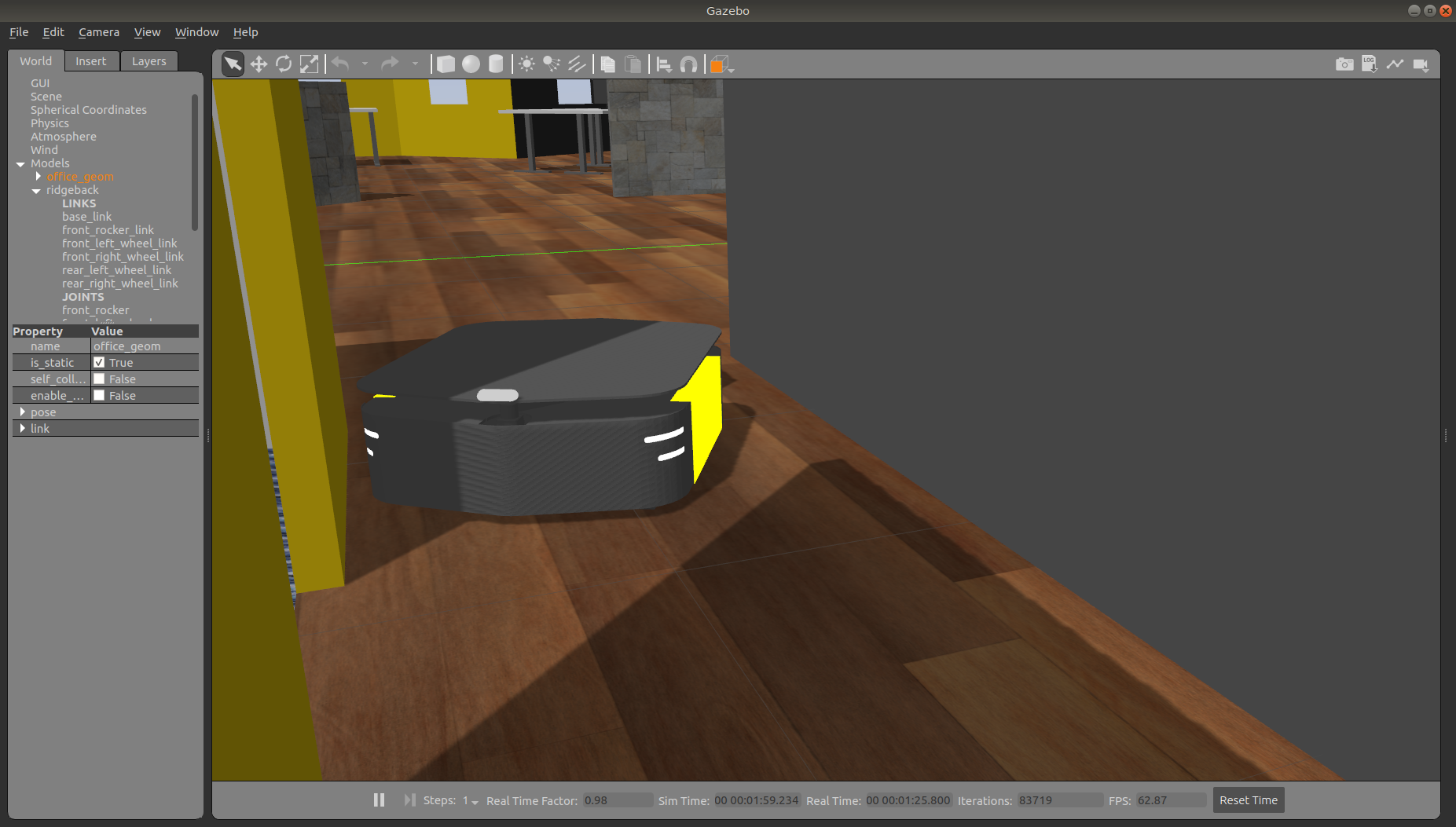

Running the Office Simulation

Office World is a small indoor environment representing a commercial office space. It features several large, open areas with furniture, as well as a narrow hallway with smaller offices and meeting rooms. It is intended to simulate missions in commercial spaces, such as facilitating deliveries, security monitoring, and inspecting equipment.

To launch Office World with a Ridgeback, run the following command:

roslaunch cpr_office_gazebo office_world.launch platform:=ridgeback

You should see Ridgeback spawn in the office world, as pictured.

To customize Ridgeback's payload, for example to add additional sensors, see Customizing Ridgeback's Payload.

Once the simulation is running you can use RViz and other tools as described in Simulating Ridgeback and Navigating Ridgeback to control and monitor the robot.

For example, below we can see the gmapping_demo from ridgeback_navigation being used to build a map of the office world:

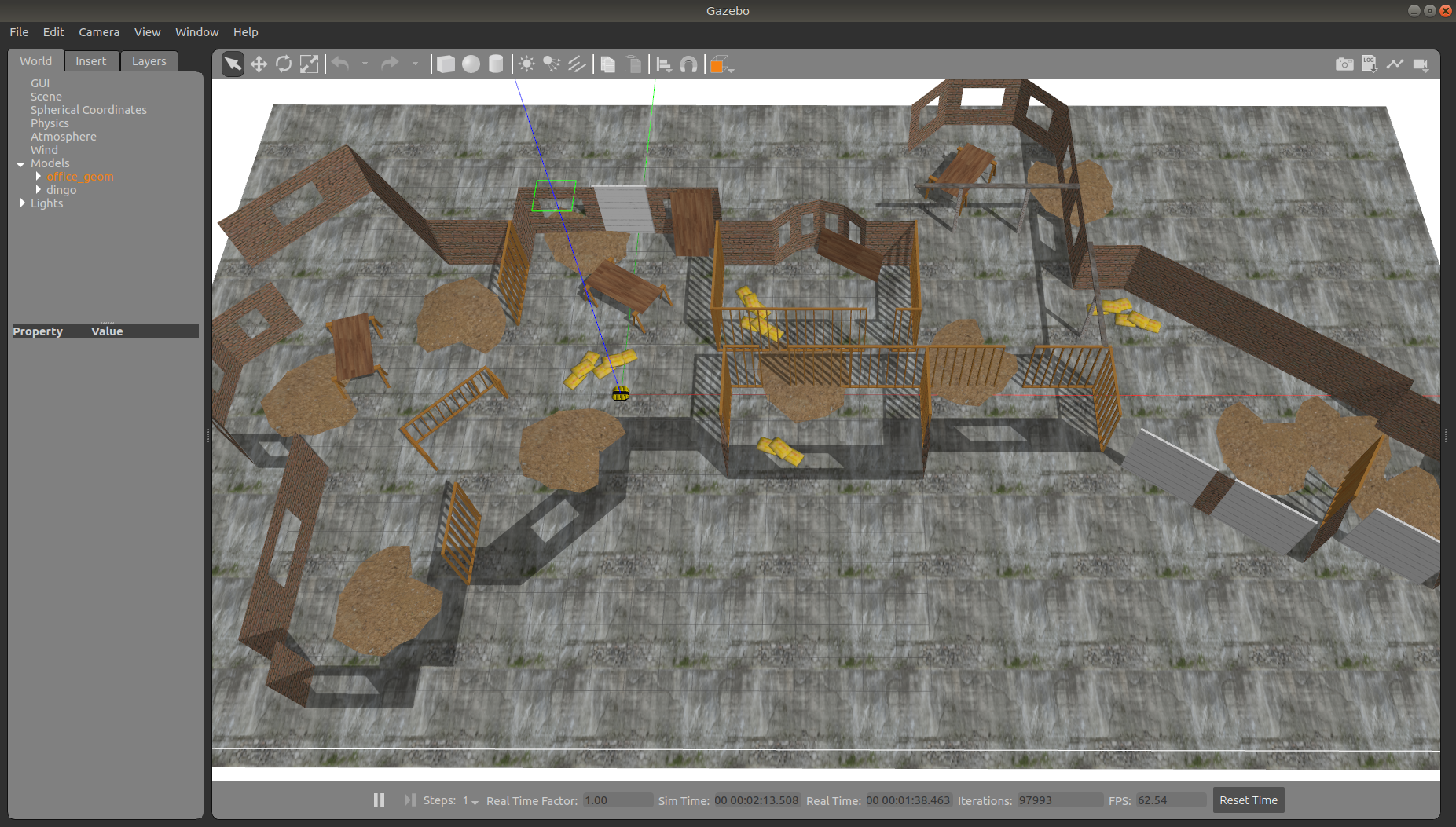

You can see the complete layout of the office below:

Running the Construction Simulation

Construction World is the same basic layout as Office World, representing the same office space under construction/renovation. It is an indoor environment with small hills of debris/rubble, partial walls, and piles of construction supplies. It is designed to simulate missions in any sort of construction site.

To launch Construction World with a Ridgeback, run the following command:

roslaunch cpr_office_gazebo office_construction_world.launch platform:=ridgeback

You should see Ridgeback spawn in the construction world, as pictured.

To customize Ridgeback's payload, for example to add additional sensors, see Customizing Ridgeback's Payload.

Once the simulation is running you can use RViz and other tools as described in Simulating Ridgeback and Navigating Ridgeback to control and monitor the robot.

You can see the complete layout of the office below:

Customizing Ridgeback's Payload

To customize Ridgeback's payload you must use the environment variables described in ridgeback_description. For example, to equip Ridgeback with a Sick LMS-111 lidar, as pictured in several of the images above, run

export RIDGEBACK_LASER=1

before launching the simulation world.

You can also add additional sensors by creating a customized URDF and setting the RIDGEBACK_URDF_EXTRAS environment variable to point to it.

For example, let's suppose you want to equip Ridgeback with an Intel RealSense D435 camera. First, install the realsense2_camera and realsense2_description packages, along with the Gazebo plugins:

sudo apt-get install ros-$ROS_DISTRO-realsense2-camera ros-$ROS_DISTRO-realsense2-description ros-$ROS_DISTRO-gazebo-plugins

Then create your customized URDF file, for example $HOME/Desktop/realsense.urdf.xacro. Put the following in it:

<?xml version="1.0"?>
<robot xmlns:xacro="http://ros.org/wiki/xacro">
  <link name="front_realsense" />

  <!--
    The gazebo plugin aligns the depth data with the Z axis, with X=right and Y=down
    ROS expects the depth data along the X axis, with Y=left and Z=up
    This link only exists to give the gazebo plugin the correctly-oriented frame
  -->
  <link name="front_realsense_gazebo" />
  <joint name="front_realsense_gazebo_joint" type="fixed">
    <parent link="front_realsense"/>
    <child link="front_realsense_gazebo"/>
    <origin xyz="0.0 0 0" rpy="-1.5707963267948966 0 -1.5707963267948966"/>
  </joint>

  <gazebo reference="front_realsense">
    <turnGravityOff>true</turnGravityOff>
    <sensor type="depth" name="front_realsense_depth">
      <update_rate>30</update_rate>
      <camera>
        <!-- 87x58 degree FOV for the depth sensor -->
        <horizontal_fov>1.5184351666666667</horizontal_fov>
        <vertical_fov>1.0122901111111111</vertical_fov>
        <image>
          <width>640</width>
          <height>480</height>
          <format>RGB8</format>
        </image>
        <clip>
          <!-- give the color sensor a maximum range of 50m so that the simulation renders nicely -->
          <near>0.01</near>
          <far>50.0</far>
        </clip>
      </camera>
      <plugin name="kinect_controller" filename="libgazebo_ros_openni_kinect.so">
        <baseline>0.2</baseline>
        <alwaysOn>true</alwaysOn>
        <updateRate>30</updateRate>
        <cameraName>realsense</cameraName>
        <imageTopicName>color/image_raw</imageTopicName>
        <cameraInfoTopicName>color/camera_info</cameraInfoTopicName>
        <depthImageTopicName>depth/image_rect_raw</depthImageTopicName>
        <depthImageInfoTopicName>depth/camera_info</depthImageInfoTopicName>
        <pointCloudTopicName>depth/color/points</pointCloudTopicName>
        <frameName>front_realsense_gazebo</frameName>
        <pointCloudCutoff>0.105</pointCloudCutoff>
        <pointCloudCutoffMax>8.0</pointCloudCutoffMax>
        <distortionK1>0.00000001</distortionK1>
        <distortionK2>0.00000001</distortionK2>
        <distortionK3>0.00000001</distortionK3>
        <distortionT1>0.00000001</distortionT1>
        <distortionT2>0.00000001</distortionT2>
        <CxPrime>0</CxPrime>
        <Cx>0</Cx>
        <Cy>0</Cy>
        <focalLength>0</focalLength>
        <hackBaseline>0</hackBaseline>
      </plugin>
    </sensor>
  </gazebo>

  <link name="front_realsense_lens">
    <visual>
      <origin xyz="0.02 0 0" rpy="${pi/2} 0 ${pi/2}" />
      <geometry>
        <mesh filename="package://realsense2_description/meshes/d435.dae" />
      </geometry>
      <material name="white" />
    </visual>
  </link>

  <joint type="fixed" name="front_realsense_lens_joint">
    <!-- Offset the camera 45cm forwards and 1cm up -->
    <origin xyz="0.45 0 0.01" rpy="0 0 0" />
    <parent link="mid_mount" />
    <child link="front_realsense_lens" />
  </joint>

  <joint type="fixed" name="front_realsense_joint">
    <origin xyz="0.025 0 0" rpy="0 0 0" />
    <parent link="front_realsense_lens" />
    <child link="front_realsense" />
  </joint>
</robot>

This file defines the additional links for adding a RealSense camera to the robot, and configures the openni_kinect plugin for Gazebo to simulate data from a depth camera. The camera itself is connected to Ridgeback's mid_mount link, offset 45 cm towards the front of the robot and 1 cm up.
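You can check where the camera frames end up by composing the fixed joints by hand. The two joints from mid_mount to front_realsense have zero rotation, so their translations simply add, and the rpy of front_realsense_gazebo_joint can be expanded with URDF's fixed-axis convention R = Rz(yaw) Ry(pitch) Rx(roll). The plain-Python sketch below does both. It shows that front_realsense sits 0.475 m ahead of and 0.01 m above mid_mount, and that the Gazebo frame's Z axis points along the robot's forward X axis, with its X axis pointing right and its Y axis down (the usual camera optical convention).

```python
"""Sketch: compose the fixed joints of the RealSense extras by hand."""
import math


def rpy_to_matrix(roll, pitch, yaw):
    """URDF rpy (fixed axes X, Y, Z) as a 3x3 rotation matrix R = Rz(yaw) Ry(pitch) Rx(roll)."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def child_axis_in_parent(matrix, axis):
    """Column `axis` (0=x, 1=y, 2=z) of R: the child's axis expressed in the parent frame."""
    return [round(matrix[row][axis], 9) for row in range(3)]


def add_offsets(*offsets):
    """Sum translations; valid only when every joint in the chain has rpy = 0 0 0."""
    return [round(sum(o[i] for o in offsets), 9) for i in range(3)]


# mid_mount -> front_realsense_lens -> front_realsense (xyz values from the xacro above)
CAMERA_OFFSET = add_offsets((0.45, 0.0, 0.01), (0.025, 0.0, 0.0))
# front_realsense -> front_realsense_gazebo
GAZEBO_R = rpy_to_matrix(-math.pi / 2, 0.0, -math.pi / 2)
```

Rounding to 9 decimal places only hides floating-point noise such as cos(pi/2) evaluating to about 6e-17.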

Now, set the RIDGEBACK_URDF_EXTRAS environment variable and try viewing the Ridgeback model:

export RIDGEBACK_URDF_EXTRAS=$HOME/Desktop/realsense.urdf.xacro

roslaunch ridgeback_viz view_model.launch

You should see the Ridgeback model in RViz, with the RealSense camera mounted to it:

To launch the customized Ridgeback in any of the new simulation environments, similarly run:

export RIDGEBACK_URDF_EXTRAS=$HOME/Desktop/realsense.urdf.xacro

roslaunch cpr_office_gazebo office_world.launch platform:=ridgeback

You should see Ridgeback spawn in the office world with the RealSense camera:

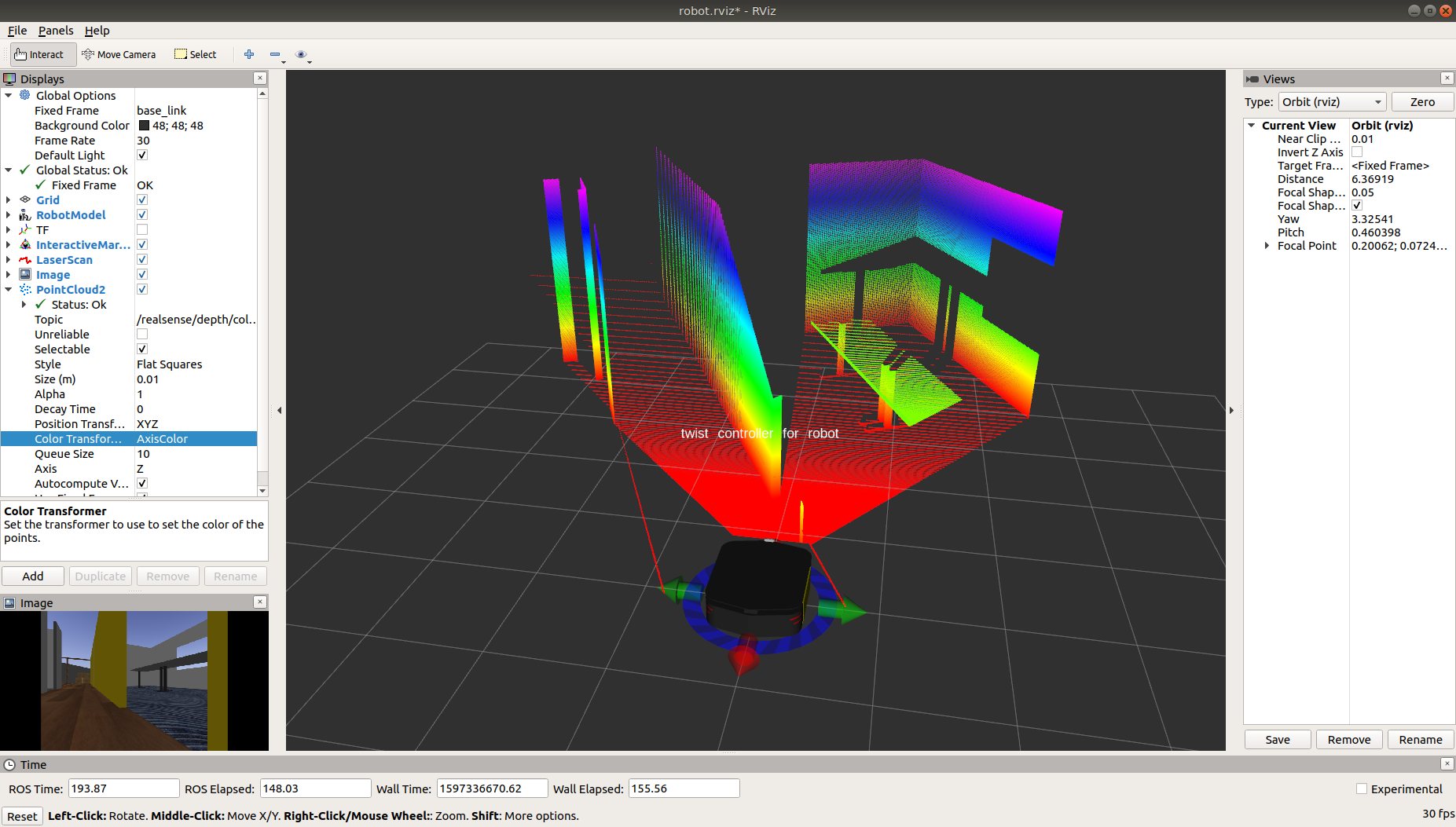

You can view the sensor data from the RealSense camera by running

roslaunch ridgeback_viz view_robot.launch

and adding Camera and PointCloud2 displays for the /realsense/color/image_raw and /realsense/depth/color/points topics:

Support

Clearpath is committed to your success. Please get in touch with us and we will do our best to get you rolling again quickly: <support@clearpathrobotics.com>.

To get in touch with a salesperson regarding Clearpath Robotics products, please email <research-sales@clearpathrobotics.com>.

If you have an issue that is specifically about ROS and is something which may be of interest to the broader community, consider asking it on Robotics Stack Exchange. If you do not get a satisfactory response, please ping us and include a link to your question as posted there. If appropriate, we will answer on Robotics Stack Exchange for the benefit of the community.